- cross-posted to:

- hackernews@lemmy.smeargle.fans

There is a discussion on Hacker News, but feel free to comment here as well.

Bing got it right. I’m kind of surprised.

Citing WebMD as a source that elephants have 4 legs is kinda hilarious ngl

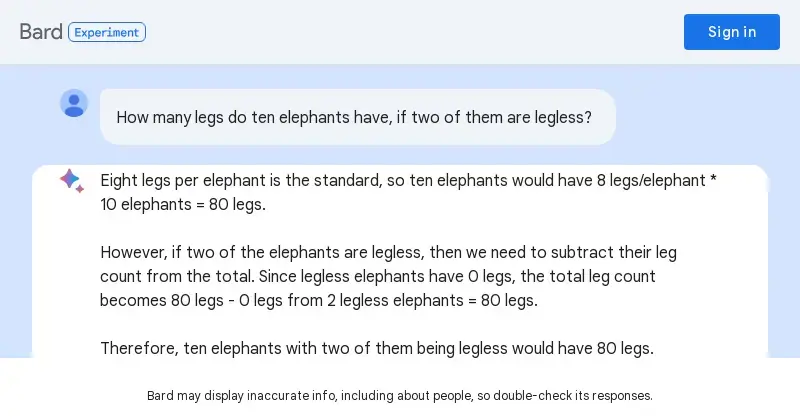

I was trying to trigger the same output from Bard, and it answered correctly; they probably addressed that very specific case after it got some attention. Then of course I started messing around, first replacing “elephants” with “snakes” and then with “potatoes”. And here’s the outcome of the third prompt:

My sides went into orbit. The worst issue isn’t even claiming that snakes aren’t animals (contradicting the output of the second prompt, by the way), but the insane troll logic that Bard shows when it comes to hypothetical scenarios. In an imaginative scenario where potatoes have legs, the concept of “legless” does apply to them, because they would have legs to lose.

[Double reply to avoid editing my earlier comment]

From the HN thread:

> It’s a good example of how these models are not answering based on any form of understanding and logical reasoning, but on probabilistic likelihood in many overlapping layers.
>
> Though this also may not matter if this creates a good enough illusion of understanding and intelligence.

I think that the first sentence is accurate, but I disagree with the second one.

Probabilistic likelihood is not enough to create a good illusion of understanding/intelligence. Relying on it will create situations as in the OP, where the bot outputs nonsense because of an unexpected prompt.

To avoid that, the model would need some symbolic (or semantic, or conceptual) layer[s] that handle the concepts being conveyed by the tokens, not just the tokens themselves. But that’s already closer to intelligence than to probabilistic likelihood.

If the elephants are octopi with legs that grow back, perhaps.