- cross-posted to:

- technology

- opensource@jlai.lu

LLM scrapers are taking down FOSS projects’ infrastructure, and it’s getting worse.

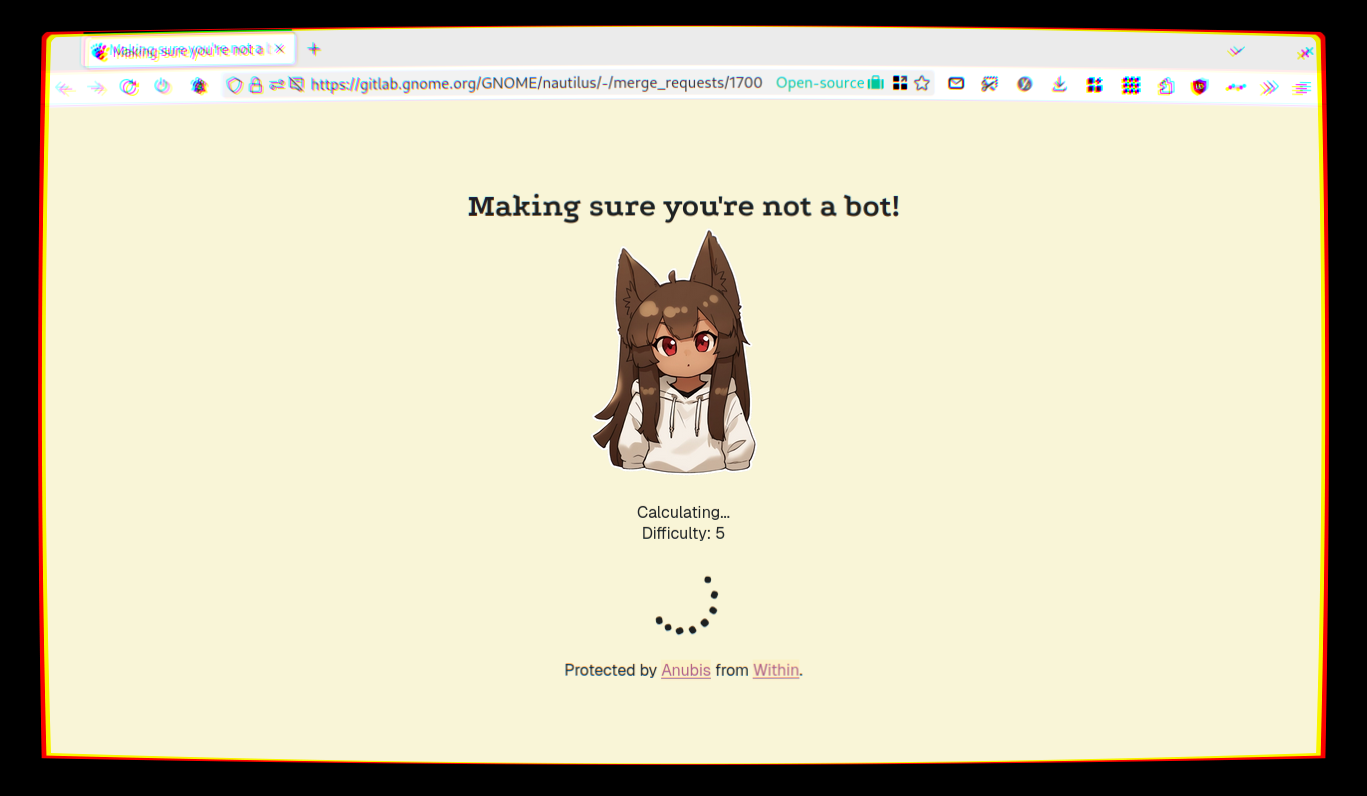

My girlfriend is gonna be mighty upset if she thinks I’m into that kinda thing. […] please change the image to something Gnome-related and/or trustworthy.

That’s an interesting takeaway from a DDoS issue

Wow, that was a frustrating read. I did not know it was quite that bad. Just to highlight one quote:

they don’t just crawl a page once and then move on. Oh, no, they come back every 6 hours because lol why not. They also don’t give a single flying fuck about robots.txt, because why should they. […] If you try to rate-limit them, they’ll just switch to other IPs all the time. If you try to block them by User Agent string, they’ll just switch to a non-bot UA string (no, really). This is literally a DDoS on the entire internet.

the solution here is to require logins. thems the breaks unfortunately. it’ll eventually pass as the novelty wears off.

Alternative: require a proof of work calculation.
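For anyone wondering what a proof of work challenge looks like in practice, here is a minimal hashcash-style sketch. It is an assumption about the general technique, not a description of how Anubis or any other tool implements it; the function names and the `challenge:nonce` format are made up for illustration. The server hands out a random challenge, the client searches for a nonce whose SHA-256 hash starts with enough zero bits, and the server checks the answer with a single hash.

```python
import hashlib
import secrets


def make_challenge() -> str:
    """Server side: issue a random, single-use challenge string (hypothetical format)."""
    return secrets.token_hex(16)


def verify(challenge: str, nonce: int, difficulty_bits: int) -> bool:
    """Server side: one hash to check that the top `difficulty_bits` bits are zero."""
    digest = hashlib.sha256(f"{challenge}:{nonce}".encode()).digest()
    value = int.from_bytes(digest, "big")
    return value >> (256 - difficulty_bits) == 0


def solve(challenge: str, difficulty_bits: int) -> int:
    """Client side: brute-force nonces until one passes verification."""
    nonce = 0
    while not verify(challenge, nonce, difficulty_bits):
        nonce += 1
    return nonce


if __name__ == "__main__":
    challenge = make_challenge()
    nonce = solve(challenge, difficulty_bits=12)  # about 4096 hashes on average
    print(challenge, nonce, verify(challenge, nonce, 12))
```

The asymmetry is the point: solving takes roughly 2^difficulty_bits hashes on average, while verifying takes one. Each extra bit doubles the cost for the client.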

This is exactly what we need to do. You’d think that a FOSS WAF exists out there somewhere that can do this

There is. That screenshot you see in the article is a picture of a brand new one, Anubis

Make them mine a BTC block in the Browser!

^Sorry, I’m low in blood and full of mosquito vomit. That’s probably making me think weird stuff.^

This is the craziest read on the subject in a while. Most articles just talk about hypothetical issues of tomorrow, while this one is actually full of today’s problems, and even puts numbers on the cost of those issues and the hours of pointless extra work. Had no idea it’s already this bad.

What’s confusing the hell out of me is: why are they bothering to scrape the git blame page? Just download the entire git repo and feed that into your LLM!

9/10 the best solution is to block nonresidential IPs. Residential proxies exist but they’re far more expensive than cloud proxies and providers will ask questions. Residential proxies are sketch AF and basically guarded like munitions. Some rookie LLM maker isn’t going to figure that out.
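A rough sketch of what that check could look like, assuming you already have a list of cloud/hosting CIDR ranges from somewhere. The ranges below are reserved documentation blocks used as placeholders, not real provider ranges, and `is_datacenter_ip` is a hypothetical helper name.

```python
import ipaddress

# Placeholder ranges (RFC 5737 documentation blocks), standing in for a real
# list of cloud/hosting provider networks that you would have to source yourself.
DATACENTER_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_datacenter_ip(addr: str) -> bool:
    """Return True if the address falls inside any listed datacenter network."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in DATACENTER_NETWORKS)


if __name__ == "__main__":
    for addr in ("192.0.2.10", "203.0.113.5"):
        print(addr, "blocked" if is_datacenter_ip(addr) else "allowed")
```

The same lookup could decide whether to serve a challenge instead of blocking outright.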

Anubis also sounds trivial to beat. If it’s just crunching numbers and not attempting to fingerprint the browser, then it’s just a case of feeding the page into Playwright and moving on.

I don’t like the approach of banning nonresidential IPs. I think it’s discriminatory and unfairly blocks out corporate/VPN users and others we might not even be thinking about. I realize there is a bot problem but I wish there was a better solution. Maybe purely proof-of-work solutions will get more popular or something.

Proof of Work is a terrible solution because it assumes computational costs are a significant expense for scrapers compared to proxy costs. It’ll never come close to costing the same as residential proxies, and meanwhile every smartphone user will be complaining about your website draining their battery.

You can do something like only challenge data center IPs, but you’ll have to do better than Proof-of-Work. Canvas fingerprinting would work.

My issue with canvas fingerprinting and, well, any other fingerprinting is that it makes the situation even worse. It plays right into the hands of data brokers, and it’s something I’ve been heavily fighting against. I simply don’t visit any website that doesn’t work in my browser, which is trying hard not to be fingerprintable.

Just now there is an article on the front page of programming.net about how data brokers are boasting of having extreme amounts of data on almost every user of the internet. If the defense against bots is based on fingerprinting, it will heavily discourage use of anti-fingerprinting methods, which in turn makes them way less effective: if you’re one of the few people who isn’t fingerprintable, then it doesn’t matter that you have no fingerprint, because that becomes a fingerprint in itself.

So, please no. Eat away on my CPU however you want, but don’t help the data brokers.

Proof of Work is a terrible solution

Hard disagree, because:

it assumes computational costs are a significant expense for scrapers compared to proxy costs

The assumption is correct. PoW has been proven to significantly reduce bot traffic… meanwhile the mere existence of residential proxies has exploded the availability of easy bot campaigns.

Canvas fingerprinting would work.

Demonstrably false… people already do this with abysmal results. Need to visit a clownflare site? Endless captcha loops. No thanks

The assumption is correct. PoW has been proven to significantly reduce bot traffic.

What you’re doing is filtering out bots that can’t be bothered to execute JavaScript. You don’t need to do a computationally heavy PoW task to do that.

meanwhile the mere existence of residential proxies has exploded the availability of easy bot campaigns.

Correct, and that’s why they are the number one expense for any scraping company. Any scraper that can’t be bothered to spin up a headless browser isn’t going to cough up the dough for residential proxies.

Demonstrably false… people already do this with abysmal results. Need to visit a clownflare site? Endless captcha loops. No thanks

That’s not what “demonstrably false” even means. Canvas fingerprinting filters out bots better than PoW. What you’re complaining about is overly strict settings and some users being denied. Make your Anubis settings too high and you’ll have users waiting a long time while their batteries drain.

What you’re doing is filtering out bots that can’t be bothered to execute JavaScript. You don’t need to do a computationally heavy PoW task to do that.

Most bots and scrapers I’ve seen are already using full (headless) browsers, and hence are executing JavaScript, so I think anything that slows them down or increases their cost can reduce the traffic they bring.

Canvas fingerprinting filters out bots better than PoW

Source? I strongly disagree. It’s not hard to change your browser characteristics to get a new canvas fingerprint every time, and some browsers like Firefox even have built-in options for it.

How much you wanna bet that at least part of this traffic is Microsoft just using other companies’ infrastructure to mask the fact that it’s them

I doubt it since Microsoft is big enough to be a little more responsible.

What you should be worried about is the fresh college graduates with 200k of venture capital money.

Sometimes, I hate humanity.

just hate the techbros

Can’t we just filter them out by iptables rules?

I’m perfectly fine with Anubis but I think we need a better algorithm for PoW

Tor has one now

Maybe it can be reused for the clearnet.

You mean the TOR project?

And Tor itself

It is part of the denial of service protection