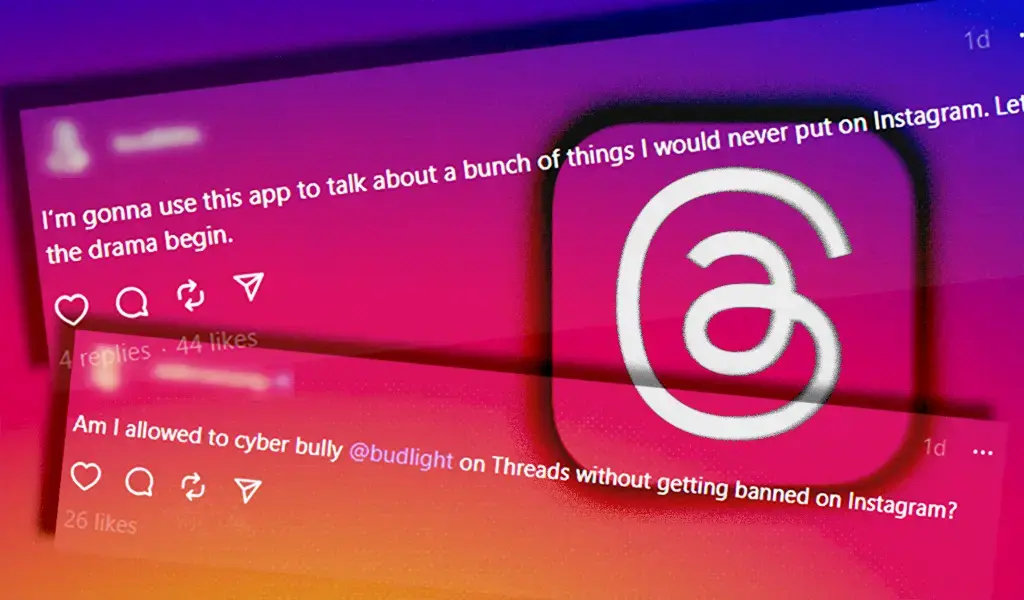

Hm, yeah I guess no one has been speculating about this part of the de/federate Threads reality. Everyone’s worried about Meta and EEE, but what we should have really been discussing is the history of Meta moderation and community guidelines which have often cited “free speech” when people use white supremacist dog whistling but cite “calls to violence” when people of color actively complain about white supremacy.

There’s a reason why we have seen news articles about large LEO Facebook groups trading and making joke comments on racist memes…

We were worried about the technology, but we should have been worried about cultural infiltration.

Exactly. What happens when a far-right troll like libsoftiktok sics thousands of rabid followers on a fediverse account? I get the feeling our small, volunteer group of moderators just doesn’t have the resources to cover that kind of brigading.

Also, I don’t think moderation can even stop brigading or the downvotes to hell avalanche. It could only stop thread and comment creation on just your one community/magazine on your instance.

Nothing could stop a bad faith actor from finding my comments on a different instance and harassing or brigading me there if that instance federated with Threads, even if my instance defederates from Threads.

This Fediverse stuff is… complex.

Well, at least downvotes aren’t going to be much of a problem, as Threads users will only be capable of upvoting stuff they see here. They don’t have a downvote button. :)

They will be able to send swarms of trolls to harass. If Threads does even federate, I suspect even admins who didn’t sign the fedipact will defederate quite fast.

The way the Fediverse is designed you need to actively seek out content. It’s not going to be all that easy being a troll from threads attacking content on the Fediverse.

What I could imagine is that within LGBTQIA+ hashtags and other culture war dimensions, the bigots might seek it out if it’s federated.

Then again, if that proves to be a problem, sites like Blahaj will probably be pretty darn quick to defederate. And this type of content, even when posted by kbin or Lemmy.world users or whatever, will probably often take place in communities hosted by instances like Blahaj. So the Threads trolls would find themselves isolated from the discussion pretty fast.

On the other hand, there’s a bunch of queer people who use threads. If all servers immediately defederate from it, these people will never get to have a glimpse into the fediverse. They could benefit a lot from joining a different platform, but if we focus only on the bigots we’ll end up never reaching them.

The same logic of course applies to other communities affected by the anti woke culture war bullshit, I’m just too lazy to come up with a more original example. :)

I don’t know, a lot of us found our way here from Reddit and Twitter without being federated.

That’s different though - it’s going here and actively creating a user and settling. Interactions with Mastodon users are mostly limited to special interest groups and microblogs I feel, even though we’re all in the same network.

For what it’s worth, LibsOfTikTok’s already getting slapped by Threads’s moderation.

Nope, she has repeatedly had posts reinstated after being initially flagged for hate speech, including that one. Meta knows their audience.

Ah, damn. Should’ve figured it was too good to be true if she was posting it.

Facebook’s moderation only covers the bare minimum. A simple mention of Hitler can get you banned (even if you’re criticizing him), while calling all LGBTQ people pedophiles and the like is de facto allowed there. Threads’ moderation is pretty much the same from what I’ve heard.

Oh, we haven’t been speculating about moderation because that’s a known quantity. A major driver of defederation discussion on the microblogging side of the fedi has been about the moderation issues that people would have to deal with if federated with Threads. And especially about bad actors on Threads getting posts from users on defederated instances via intermediary sites, and then spotlighting vulnerable people to trolls on other instances.

It’s why many niche Mastodon instances are talking about defederating from any other site not blocking Threads. It’s a significant mental safety risk for vulnerable people in the alt-right’s sights.

I’m not an “early adopter” of the Fediverse per se, but I came over on the reddit migration on June 11. I feel like I’ve been an information sponge trying to wrap my head around the organization of the Fediverse and seeing the benefits. I think I’m pretty up to speed, at least enough to discuss it with people offline and explain it in a way that does it some justice.

But I don’t think I’ve seen a lot of discussion about the drawbacks of the Fediverse. I’ve seen a few threads about major privacy concerns related to the Fediverse, but most of the comments responding just kind of hand wave the issue.

Seeing a possible larger issue here regarding the moderation issues, I can’t see anything other than a total containment of Threads away from other instances. Like, great - use ActivityPub, but don’t talk to me (kbin.social) or my child (literally everything else that wants to interact together in the Fediverse with kbin) again. Lol

The thing is, because minority-targeting trolls aren’t taken seriously by any corporate social media platform, there’s no big downside compared to them. It’s just that them showing up here is effectively taking the safer space these communities have built away from them, returning things to basically how they were just before they fled those other spaces.

They were made safe not due to the tools, but due to obscurity, and they’re about to lose that obscurity.

This is… I don’t want to call it a “good thing”, because people who have suffered many assholes suffering them all over again is in no way, shape, or form good, but it’s highlighting an issue that’s been clear to these communities, but not to developers on the Fediverse: The moderation tools here are hot, sweaty garbage.

Hopefully we can see serious movement on making useful tools now.

I don’t know if you have history on reddit, but the “safety because of obscurity” and having that taken away by increased visibility is absolutely what I lived through as a member of a subreddit called TwoXChromosomes. TwoX was a really welcoming space for women-identifying people to get a breath of fresh air from the constant “equal rights means equal lefts” kind of casual misogyny on the rest of reddit. And then corporate created the “default sub” designation and put TwoX on the list.

I remember the moderators at the time making it very clear to the community that they voiced their dissent but it was happening anyway (wow, what does that sound like?) and now a lot of the posts there get inundated with “not all men” apologists and all the OPs have reddit cares alerts filed on them.

@MiscreantMouse from my post and upvote history you can verify that I’m pretty defensive of Meta federation because I think cutting them off immediately is against the spirit of open protocols. Their poor moderation would be an extremely legitimate reason to defederate. I’m against the defederation pact to fully cut them off before they even enter the fediverse, but cutting them off as a pragmatic response to their actual character once they arrive is completely justified.

The thing is, Facebook already exists. We have no reason to believe that they would moderate any differently with Threads. I haven’t been on facebook in 10 years and I don’t want to be there again.

New nazi bar over there apparently.

Pretty low at that.

I think they are referring to a bar for drinking, and more specifically this post: https://twitter.com/IamRageSparkle/status/1280891537451343873?s=20

If you can’t open it due to Twitter shenanigans, here is a random website that basically screenshotted the entire post but wrote a lengthy introduction for search engine optimization: https://www.upworthy.com/bartender-explains-why-he-swiftly-kicks-nazis-out-of-his-punk-bar-even-if-theyre-not-bothering-anyone

You’re right…I misinterpreted the post. Thanks for the clarification.

I’m familiar with the bartender copy pasta. It made the rounds on Reddit a fair amount! Link is still appreciated for anyone that’s not.

Boy, Twitter’s UI is hot garbage. The replies to the original poster made his series of tweets about the bar impossible to read.

White supremacists are like that guy nobody ever wants at their party but who always invites himself anyway. It’s hard enough to keep him from washing his balls in the punch bowl when you’re actively trying to keep him out. Meta doesn’t even try except to the meager extent required by law.

Yes, bigots are bad. And if you see a bigot on the internet, you don’t have to click on their profile or view anything they put out into the void…And it is a void by the way, the amount of people that their content appeals to is a very small number of people.

So what’s the harm in them having a platform if hardly anyone will even pay attention to them?

dealing with someone who physically shows up to your place unwanted and uninvited isn’t the same thing as allowing them to tweet mean things.

I think I get what you’re saying, and there was a time when I would’ve agreed. I spent more years than I care to admit on 4chan, years I wouldn’t have spent if I didn’t think there was some value to people expressing their opinions no matter what they were. But…I dunno man, it’s not a ton of people, but I wouldn’t call it a “very small” number of people. Also the issue I’m getting at isn’t that they have a platform, it’s that if you let them they will try to make every platform their platform. And if it’s an organized group they will do so in an organized way that is not the same as Uncle Ted cocking off about immigrants again or whatever.

You’re correct that you don’t have to look at their profile, any more than you have to drink the pube punch. The issue isn’t that I had to see the words of meanies. The issue is that allowing white supremacists to use your platform a) makes it look like the platform condones such things, which reflects both on the platform and the other users, which may cause the non-extremist users to leave if it gets bad enough, thus tipping the balance of users more in the extremists’ favor; and b) encourages people who agree with them. And the number of people who think certain people shouldn’t have rights doesn’t have to be very big for them to decide to organize and do something about it, including egging others on.

Also you mentioned tweets, so I should apologize for not clarifying before. When expressing concern over extremists inviting themselves, I was not thinking about Twitter so much as I was thinking about the fediverse. I’m more concerned with what people are trying to build here than with whatever it is they’re doing at Twitter these days. Elon’s gonna Elon and we can’t control that. We can, though, choose what company we keep here.

Literally why

They already have Truth Social and Twitter.

there aren’t minorities in those places for them to attack, which is what they want to do

Facebook is also a big gathering place for white supremacists, anti-LGBT, and other conservative extremists. It’s largely where the US Capitol insurrection was organized. Meta is no stranger to fascism.

Hitler already had Germany. Why’d he want the Czech Republic and Poland?

They’re crusaders. They’re never content with what they have. There has to be some land to conquer, some people to oppress. If there isn’t, they’ll just look inward and find one.

That’s what I expected from the start.

I guess I just assumed that that was commonly understood. As soon as I saw that it was going to be run according to Facebook’s moderation standards, I took that to mean that it was going to be tailored to suit white supremacists and Christian nationalists, like Facebook.

Back when I used facebook, I barely ever saw any bigots…mainly because I never went looking for that kind of content on any website I’ve ever been to.

The real reason why Facebook sucks is because they collect literally everything they can about your entire life, they look through your files on your local storage. And if you block any of their spying, they’ll want to see your ID and SSN.

There’s bigots on every platform. Have you ever read any youtube comment sections ever?

Your activity on this thread implies you have a vested interest in downplaying Facebook’s bias. I’m not sure why…

Anyway - of course there are bigots on every platform, and of every stripe for that matter. The internet has enabled bigotry on a scale never before imagined, and the steady slide of much of modern civilization, and the US and UK (and lately Canada) in particular, toward overtly corrupt plutocracy has left more people than ever desperate for some way to assure themselves that everything wrong with the world is somebody else’s fault, which makes bigotry a growing enterprise.

But while bigotry is ever-present, each individual site has its own expressed bias for the particular forms it’s more or less likely to at least tolerate, if not actively encourage.

And Facebook’s expressed bias is toward white supremacism and Christian nationalism.

Your inference is wrong. Facebook is terrible.

The genocide of the Rohingya people was largely organized on Facebook. Meta is not to be trusted with any of this shit.

@MiscreantMouse This is why I’m of the opinion that defederating from anything that smacks of Meta or Threads should be done immediately. Zsuck supports Russian bots, Alt-right Insurrectionists and hate speech and has done so since 2015, in other words, longer than Elon. Should be walled off and removed like a cancerous tumor. In my view, that should include any instance that signed an NDA with them.

I saw a survey of instances that indicated many are taking a “wait and see” approach, which is mystifying. What do people think they are going to find that they don’t already know about Meta?

Supporting free speech means allowing people you hate to talk too. Censor a Nazi one day, then the next day it’s something your weird friend likes, then the next day it’s something you like.

Everyone deserves a platform online, but they have to earn their audience. Censoring them is only going to make more people want to go to other platforms to hear and see what they have to say.

I am not required to respect “free speech” when it comes from a place of fundamental dishonesty. Slander is not protected speech. They are within their rights to bitch and complain about whatever non-issue they’re up in arms about today and I’m within my rights to ban and ignore them.

They are, notably, NOT within their rights to call for violence and death against LGBTQ+ folks, which many are doing, because that constitutes hate speech, assault, or even inciting a riot, depending on which particular situation you find yourself being a bigot in. All three of these are illegal and are not protected speech.

Tolerance of intolerance is not a paradox, it is a failing of the people who are supposed to be protecting their communities. Tolerance of Nazis and racism is not required by the tenets of the Constitution or by the tenets of democracy and instead actively erodes the protections enshrined within each.

In short, Nazi punks, fuck off.

You’re right, Slander isn’t protected speech. But to prove that it’s slander, you need to prove that it damaged you and you need to prove that it was said with malicious intent. You also need to prove that the statement isn’t true.

Who’s going to take an actual nazi seriously when they have their stupid little protests against jewish people? Not that many…at least not once they find out that they’re a nazi.

That’s just a common misconception. Free speech is there to protect people from the government, not business. If my anti-racism voice gets suppressed on Threads (assuming I ever make an account there) I’d just move to another platform.

And really, there’s no good reason for a well-intended internet community to allow racism to expand.

Racism will expand if you censor it.

How many racists have a big audience? And I mean openly, explicitly racist. Not the dog-whistle racism from Fox news.

People have been censored by automated systems for just criticizing racists. Yes, that means that all the people who call them out for being shitty get censored too.

Racism will expand if you censor it.

Literally the exact opposite is true. Deplatforming bigots limits their audience, and limits their ability to propagandise.

It doesn’t mean you have to give them the platform, though. If they want to create their own Nazi federation that’s entirely on them, but you don’t have to integrate their content.

If these companies are going to control what’s on their platform then they shouldn’t get a liability shield.

They’re a bookstore censoring the content of the books they have in the store.

If you don’t like what someone has to say online you don’t have to click on their profiles or follow them or read what they’re saying.

such a slippery slope! supporting free speech means allowing people to talk about how much they want queer people dead, too. tell the people calling for violence against queer people to fuck off, and maybe one day your very own calls for violence might get told to fuck off!

everybody deserves a platform to call for the extermination of people groups, but they have to earn their audience 😏. i think we should do absolutely nothing to stop them, because doing anything just makes them stronger anyways. /s

There’s already laws on the books for the things you mentioned, that’s not what I was talking about at all.

The right to free speech is drawn from a US constitutional amendment, which says the US government can’t censor speech, but it has nothing to do with private platforms like this, much less individual responses to Nazi rhetoric. Nobody owes hate speech a free platform.

But these private platforms have a liability shield. If they have a liability shield, they shouldn’t be allowed to censor things.

they shouldn’t be allowed to censor things

I disagree, and so does US law. Abusive material shouldn’t be spread just because it can be.

Illegal shit is already illegal. That’s not what I was talking about.

You missed the point, but whatever, you don’t get to force private platforms to host content, that’s up to the owners.

If they get a liability shield, they shouldn’t get to control what happens on their platform.

Quite a sentence. I guess that’s where I’m going to stop taking you seriously.

Free speech has always had limits.

It’s mostly about the government not arresting you for what you say. It doesn’t protect you from the consequences of saying hateful things in a public space. Say something racist in an area largely populated by the race you’re talking about and you’re likely to get kicked; post some right wing misinformation in an online space that is largely left-leaning and you’re likely to have your post deleted. Neither of those things infringes on anyone’s right to free speech because other people also have the right to not want to listen to Nazis or racists or TERFs etc.

Disagree. Being absolutist with free speech because we can’t trust bad faith actors to honor boundaries is not going to work, because they don’t care about their own hypocrisy.

Advocating genocide is beyond free speech. And that’s what Nazi ideology, and fascism in general, do.

Title could easily be changed to “Facebook and Instagram users move to Threads” and it would mean the same thing.

This would be the strongest reason for instance admins to not let Threads federate with their instances.

Yeah there’s a few of those types here too unfortunately.

Hopefully not on beehaw. That’s the beauty of federation. We can pay our mods, AND mod the way we want.

There will be bad actors of all descriptions on all platforms. The key is how effectively they are dealt with. We’ll see on that.

In other news, idiots exist everywhere. The interesting part will come when meta/threads responds to this.

I would be surprised if they respond any differently than before, i.e. hardly at all.

Welp.

They flock everywhere that will have them. There are definitely far-right shitstains on every platform, even this one. They’re like a gas and expand to take up whatever volume they’re in, or in this case, that doesn’t ban them. Simply “flocking” to a platform isn’t really an indictment.

Now where they stay, that’s a different matter.

When Stinky Elon took over twitter, I kept hearing everyone complain about all the bigots on there. But I haven’t seen any bigots on there. Why? Because I’m not following any of them and I’ve never gone looking for them

Good for you, but don’t take that for granted. People who just happen to have a little bit of a following and openly exist as minorities on social media regularly get bigots coming out of the woodwork to harass them.

- skymtf ( @skymtf@pricefield.org ) 3•1 year ago

They existed long before Elon; LibsOfTikTok was put on a do-not-take-action list by the former admin. Secondly, the algorithm ensured I always knew hate accounts existed.