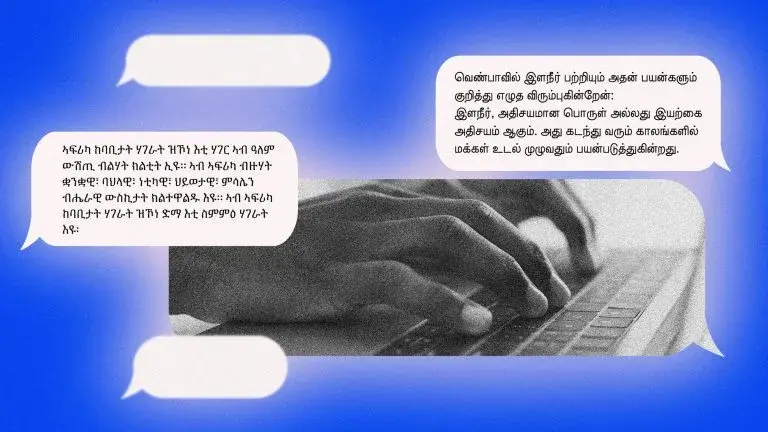

Outside of English, ChatGPT makes up words, fails logic tests, and can’t do basic information retrieval.

ChatGPT is not only made for information gathering, though.

I’d argue that it is not made for information gathering at all, and it is largely coincidental that it performs as well as it does even in English.

Our CIO at work posted a warning about using ChatGPT on sensitive data. The shocking part was that, in his examples of why we might already be using ChatGPT, he mentioned "for performing a quick fact check", which is insane to me. Who would use a system that is known to just generate likely answers, even when they are untrue, for a fact check of all things?!

Machine learning is only as good as the dataset it has. Given that English has a HUGE dataset on the internet, it's okay at it, but it makes sense that for other languages it's likely not ideal.

An example would be art. Look up a model trained on a smaller dataset (e.g., a fully legal one where all training data had artist permission) versus ones trained on the larger datasets where legality wasn't a concern. It's a night and day difference.

ChatGPT is actually able to carry information it learns in one language over into other languages, so if it's having trouble speaking Bengali and such, it must simply not know the language very well. I recall a study where an LLM was trained on some new information using English training data and then asked about it in French, and it was able to talk about what it had learned in French.

Of course. But translation brings mistranslation, especially with languages rated tier 3+ in difficulty for an English speaker to learn. The data is subject to the accuracy of the translation, and ChatGPT's translation is still pretty far from perfect.

Ah, I had interpreted your comment to mean that you thought ChatGPT wouldn’t know how to answer a question in Bengali unless the information it needed to solve the problem had been part of its Bengali training set. My bad.