- cross-posted to:

- auai@programming.dev

I have a hard time seeing what we are currently calling “AI” evolve to address issues like this. It’s not real intelligence, it’s just text prediction. It seems fundamentally flawed for use-cases where you need 100% certainty of the answers being appropriate.

This isn’t the AI people think it is. And the only danger it poses is irresponsible use.

Part of why the NLP community is so excited about it is because text prediction as an optimization problem eventually necessitates some form of intelligence in order to reduce the loss, and the architectures we’re using scale nearly linearly in quality vs size and show no real signs of diminishing returns, meaning you can make them arbitrarily smart just by making them bigger.

I would encourage you to consider what you mean by “real” intelligence and “just text prediction”, because AI throws a lot of our assumptions out the window. Talk to GPT-4 in a chatbot cognitive architecture for a few hours and you get a sense of just how intelligent it can be (with the right prompting), but the architecture itself is literally incapable of “thinking” (some wiggle room for inter-layer states) - that is, internal, stateful, causal processes which drive external behavior. A chatbot CA can vaguely approximate it via chain of thought prompting, but without that it essentially has to guess what its thoughts “would” be if it had them, which is very weird and hard to understand intuitively.

In case it isn’t clear, what I mean by “cognitive architecture” is the machinery surrounding the language model which lets it interact with the world. A language model in isolation is a causal autoregressive inference engine that will happily autocomplete anything. Language models are not chatbots, only components in chatbots - that’s just the modality we’re most familiar with because ChatGPT broke ground, but it’s not their only or even most useful form. The LLM is comparable to a human Broca’s area, which will generate an endless stream of language if you let it. It’s the neural circuitry around it which gives rise to coherent thoughts and subjective experience.
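To make the model-versus-chatbot distinction concrete, here is a minimal sketch. Everything in it is a toy assumption for illustration: a bigram counter stands in for the language model, and the names `train_bigram`, `autocomplete`, `chat_turn` and `CORPUS` are hypothetical, not any real library’s API. The bare model just keeps continuing the text, including the user’s side of the conversation; the thin wrapper around it is what makes it behave like a chatbot.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional

# Toy training text (an assumption for this sketch): a transcript-shaped string.
CORPUS = (
    "User: hello Bot: hi there User: hello Bot: hi there "
    "User: bye Bot: see you User:"
)

def train_bigram(corpus: str) -> Dict[str, Counter]:
    """Count which token follows which. A stand-in for a real language model."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    tokens = corpus.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def autocomplete(model: Dict[str, Counter], prompt: str,
                 max_tokens: int = 20, stop: Optional[str] = None) -> str:
    """Greedy autoregressive decoding: append the most likely next token, repeat.

    This toy model only looks at the previous token; a real LLM conditions
    on the whole preceding context.
    """
    tokens = prompt.split()
    if not tokens:
        return ""
    generated: List[str] = []
    for _ in range(max_tokens):
        followers = model.get(tokens[-1])
        if not followers:
            break  # the model has never seen this token, nothing to predict
        nxt = followers.most_common(1)[0][0]
        if nxt == stop:
            break  # the wrapper decides where a "turn" ends, not the model
        tokens.append(nxt)
        generated.append(nxt)
    return " ".join(generated)

def chat_turn(model: Dict[str, Counter], history: List[str], user_msg: str) -> str:
    """The 'machinery around the model': format a transcript, cut at the turn marker."""
    history.append(f"User: {user_msg}")
    prompt = " ".join(history) + " Bot:"
    reply = autocomplete(model, prompt, stop="User:")
    history.append(f"Bot: {reply}")
    return reply

model = train_bigram(CORPUS)
# Bare model: runs straight past the end of the bot's turn and writes the user's lines too.
raw = autocomplete(model, "User: hello Bot:", max_tokens=6)
# Wrapped model: the same predictor, but the output stops at the turn boundary.
reply = chat_turn(model, [], "hello")
```

With this corpus, `raw` carries on into a new `User:` turn, while `reply` is cut off at the end of the bot’s turn. Tool use, memory, or chain of thought prompting would be further layers of the same kind of wrapper, not changes to the predictor itself.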

To be able to accurately discuss these concepts, we need to change the language we use. Words like “intelligence”, “consciousness”, “sentience”, “sapience”, etc. have always been incredibly vague, approaching completely undefined. They can’t be adequately applied to AI until they’ve been operationalized, such that you could objectively falsify whether or not they apply to a given system.

Very nicely put. If I observe any real person replying in text, what I’m seeing is essentially just them thinking about what word to put next and entering it on the keyboard. It is an extremely complex task. I’m not saying that state of the art language models are also mulling the same thoughts in their “minds” like we are, but that they are solving the same problem. And our current paradigm of training these models shows no sign of slowing progress, so I understand the sentiment that calling these models just “text prediction machines” is too simplistic.

This isn’t the AI people think it is.

It’s definitely not as good as people think it is. The best description I heard was that AI outputs “hallucinations” as it only needs to look plausible, it doesn’t have to be right.

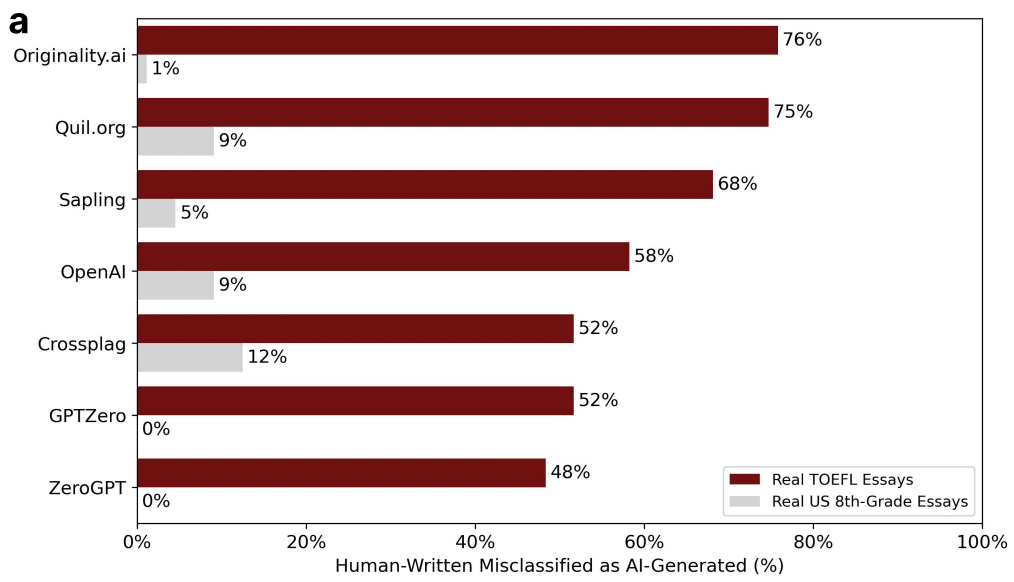

Which is why using it to detect cheating is a concern - you’d hope it would only be used as a first pass, with a human reviewing the results later, but some people are going to think that AI is infallible and leave it there.

Stephen Wolfram’s article on how ChatGPT works was enlightening: https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/

Like you said, it’s just text prediction, using online content as the training ground.

Yeah, this AI is good for writing unimportant stuff like “talking to” famous dead people, or D&D descriptions on the fly. It can be useful for basic coding if you know how to fix its mistakes. Oh, and keeping telemarketers busy a la Jolly Roger. And I guess spam blog posts.

It’s still best used as a toy. When I tried using it to augment my work, it was usually worse than a good search engine at answering questions.

The free image generators are pretty impressive too, for things like making flavor art for D&D on the fly, or if you’re just not an artist. Some of the tuned ones can make decent standalone art or fake pictures, but so far I don’t think you can take a character you created and get it to make a graphic novel with that character.

So - watch out people who make RPG modules I guess.

Yeah, gaming and the arts are where I can see this AI shine. Aside from mundane artistry, I don’t think anyone needs to be worried about their job. This AI isn’t going to steal your job because, once again, it has no real intelligence. It requires an intelligent person to steer it and process its results. It’ll only cost you your job if you don’t evolve to use this new tool.

But it is intelligence. Just a very different form than we are generally used to. It’s not entirely trustworthy or accurate in its output yet, but that’s ok for what is effectively early stage AI. Humans have never been fully functional or reliable, but can still be useful. We have fully functional agents capable of doing complex things like building a functional computer out of eBay listings, or ordering a pizza in the style you request. I’ve trained less reliable or capable human beings. It is not sentient, it is not perfect or completely reliable, but it is more than just a parrot. It is capable of creating and responding to some novel situations. Of course there is still a lot more to be worked on.

Do you think there is no stochastic element to our natural use of language? Are you never confused by a word that came out of your mouth that upon immediate reflection isn’t a word you would have intended to say at all? What we have built is just a piece of the puzzle, but it’s not stopping there.

There is a lot of work to be done in mechanistic interpretability and alignment. Users also need to understand the abilities and limitations of the tool, but it’s absurd not to be impressed and excited by the current state of neural networks.

Edit: apparently consciouscode said it all and better. I should read more replies before creating my own. Still, very excited for the near future with this technology.

I’ve seen so many horror stories of profs and teachers who can’t adapt just burning any and all good will with their students by failing people on the whims of these stupid checkers as well.