- cross-posted to:

- technology

Sometimes this works out well though. I like when I get, say, black Sailor Moon:

Sailor moon, you ain’t got no hands!

Workaround for fingers having the wrong count.

That would be a reason to scream…

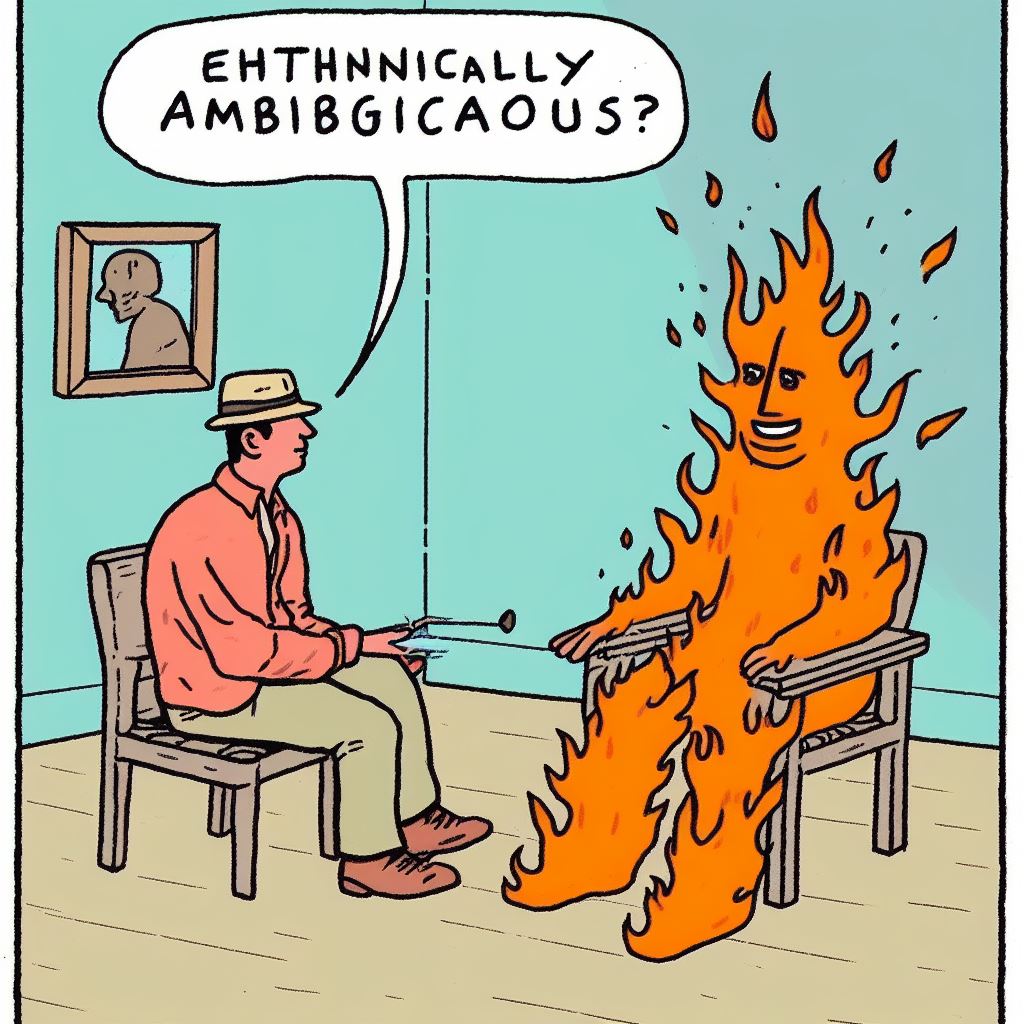

thanks that’s a big collection of ethically ambiguous memes ^^

Homer? Who is Homer? My name is “Ethinically Ambigaus”

I’ve been encountering this! I thought it was the topics I was using as prompts somehow being bad – it was making some of my podcast sketches look stupidly racist, admittedly though some of them it seemed to style after some not-so-savoury podcasters, which made things worse.

I think I just found the name for my Tav in my upcoming Durge run of Baldur’s Gate 3.

“Ron stood there with his Ron shirt.”

That explanation makes no fucking sense and makes them look like they know fuck all about AI training.

The output keywords have nothing to do with the training data. If the model in use has fuck all BME training data, it will struggle to draw a BME regardless of what key words are used.

And any AI person training their algorithms on AI generated data is liable to get fired. That is a big no-no. Not only does it not provide any new information from the data, it also amplifies the mistakes made by the AI.

They are not talking about the training process, to combat racial bias on the training process, they insert words on the prompt, like for example “racially ambiguous”. For some reason, this time the AI weighted the inserted prompt so heavily that it made Homer from the Caribbean.
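For anyone wondering what that kind of prompt insertion might look like, here is a minimal sketch. Everything in it is an assumption for illustration: the qualifier list, the function name `augment_prompt`, and the `rate` parameter are made up, and nobody outside the company knows how the real service does it.

```python
import random

# Hypothetical qualifier list; the real service's list is not public.
QUALIFIERS = ["ethnically ambiguous", "racially ambiguous"]


def augment_prompt(user_prompt: str, rng: random.Random, rate: float = 0.5) -> str:
    """Silently append a qualifier to a fraction `rate` of prompts.

    The user never sees the appended text; only the image model does.
    """
    if rng.random() < rate:
        return f"{user_prompt}, {rng.choice(QUALIFIERS)}"
    return user_prompt


rng = random.Random(0)
# With rate=1.0 the qualifier is always appended, even for a named
# character like Homer, which is where the weird results come from.
print(augment_prompt("Homer Simpson talking to a therapist", rng, rate=1.0))
```

Note that nothing here checks whether the prompt already names a specific character, so the qualifier gets applied to Homer just like it would to “a doctor”.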

They are not talking about the training process

They literally say they do this “to combat the racial bias in its training data”

to combat racial bias on the training process, they insert words on the prompt, like for example “racially ambiguous”.

And like I said, this makes no fucking sense.

If your training process, specifically your training data, has biases, inserting keywords does not fix that issue. It literally does nothing to actually combat it. It might hide issues if the model has sufficient training to do the job with the inserted keywords, but that is not a fix, nor combating the issue. It is a cheap hack that does not address the underlying training issues.

but that is not a fix

congratulations you stumbled upon the reason this is a bad idea all by yourself

all it took was a bit of actually-reading-the-original-post

?

My position was always that this is a bad idea.

the point of the original post is that artificially fixing a bias in training data post-training is a bad idea because it ends up in weird scenarios like this one

your comment is saying that the original post is dumb and betrays a lack of knowledge because artificially fixing a bias in training data post-training would obviously only result in weird scenarios like this one

i don’t know what your aim is here

You started your initial rant based on a misunderstanding of what was actually said. Stumbling into the correct answer != knowing what you’re reacting to

Yes. The training data has a bias, and they are using a cheap hack (prompt manipulation) to try to patch it.

Any training data almost certainly has biases. For a while, if you asked for pictures of people eating waffles or fried chicken they’d very likely be black.

Most of the pictures I tried of kid-type characters were blue eyed.

Then people review the output and say “hey, this might still be racist”, so they tweak things to “diversify” the output. This is likely the result of that, where they’ve “fixed” one “problem” and created another.

Behold, Homer in brownface. D’oh!

any AI person training their algorithms on AI generated data is liable to get fired

though this isn’t pertinent to the post in question, training AI (and by AI I presume you mean neural networks, since there’s a fairly important distinction) on AI-generated data is absolutely a part of machine learning.

some of the most famous neural networks out there are trained on data that they’ve generated themselves -> e.g., AlphaGo Zero

They could try to compensate the imbalance by explicitly asking for the lesser represented classes in the data… It’s an idea, not quite bad but not quite good either because of the problems you mentioned.
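A rough sketch of that idea, assuming you had class labels for the training set (which is itself a big assumption): weight each class by the inverse of its frequency, then use those weights to decide which class to explicitly ask for. The function names and the toy labels are hypothetical.

```python
import random
from collections import Counter


def inverse_frequency_weights(labels):
    """Return normalized weights so that rarer classes get larger weights."""
    counts = Counter(labels)
    total = sum(1.0 / c for c in counts.values())
    return {label: (1.0 / c) / total for label, c in counts.items()}


def pick_class(weights, rng):
    """Sample one class to request explicitly, favoring rare classes."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Toy data: class "a" is heavily over-represented compared to "b".
labels = ["a"] * 90 + ["b"] * 10
weights = inverse_frequency_weights(labels)
rng = random.Random(0)
print(weights)
print(pick_class(weights, rng))
```

This only changes what gets requested. If the model has barely seen a class, asking for it more often does not teach it to draw that class any better.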

The number of fingers and thumbs tells me this maybe isn’t an AI image.

Nah, AI has just gotten really good at hands. They can still be a bit wonky, but I’ve seen images, hands and everything, that’d probably fool anyone who doesn’t go over the picture with a magnifying glass.

Imagine the tons of hand pictures they fed AI models just to combat that

Imagine the sheer number of fingers

More hands than your mum took.

Sorry, I couldn’t resist.

Look at the hands in the upper corners, looks pretty AI-ish.

Yeah, those thumbs are not proportional to the wrist. And now I look again, bottom right and bottom left have an extra nub.

Word, here’s a prompt with DALL-E 3 that also said something similar.

Was this your prompt? This seems to happen a lot then

It wasn’t in the prompt. I actually asked for him to be telling the therapist “I just want to be put out”.