See THIS POST

Notice the 2,000 upvotes?

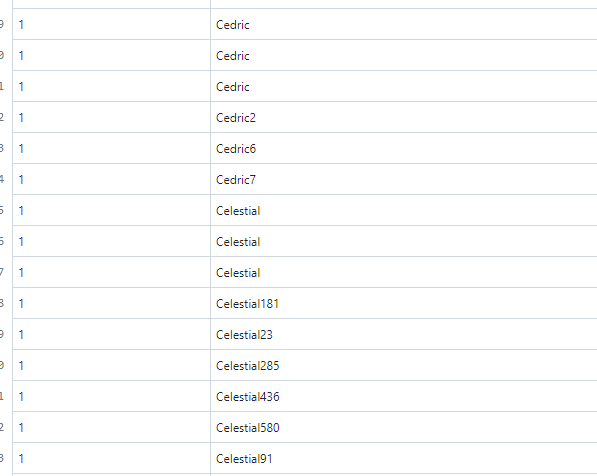

https://gist.github.com/XtremeOwnageDotCom/19422927a5225228c53517652847a76b

It’s mostly bot traffic.

Important Note

The OP of that post did admit to purposely using bots for that demonstration.

I am not making this post specifically about that post. Rather, we need to collectively organize and find a method for dealing with this.

Defederation is a nuke-from-orbit approach, which WILL cause more harm than good over the long run.

Having admins proactively monitor their content and communities helps, as does enabling new-user approvals, captchas, email verification, etc. But this does not solve the problem.

The REAL problem

The real problem is that the fediverse is so open, there is NOTHING stopping dedicated bot owners and spammers from…

- Creating new instances to host bots, and then federating with other servers. (Spinning up a completely new instance can be fully automated, and takes UNDER 15 seconds.)

- Hiring kids in Africa and India to create accounts for 2 cents an hour. (NEWS POST 1, POST TWO)

- Lemmy is EXTREMELY trusting. For example, go look at the stats for my instance (lemmyonline.com) online… I can assure you I don’t have 30k users and 1.2 million comments.

- There are no built-in “real-time” methods in the UI for admins to identify suspicious activity from their users; I am only able to fetch this data directly from the database. I don’t think it is even exposed through the REST API.
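Until something like this exists in the UI, part of the last point can be handled offline. Below is a minimal sketch that flags accounts casting an unusual burst of votes, assuming vote events have already been exported from the database as `(user, unix_timestamp)` pairs. The input shape, the function name `flag_vote_bursts`, and the default window and threshold are all hypothetical; this is not Lemmy's schema or API.

```python
from collections import defaultdict, deque


def flag_vote_bursts(events, window_seconds=60, max_votes=30):
    """Return the set of users who cast more than max_votes votes
    inside any window_seconds-long span.

    events: iterable of (user, unix_timestamp) pairs. This shape is an
    assumption; real data would have to be exported from the instance DB.
    """
    recent = defaultdict(deque)  # user -> timestamps inside the current window
    flagged = set()
    for user, ts in sorted(events, key=lambda e: e[1]):
        window = recent[user]
        window.append(ts)
        # Drop timestamps that have slid out of the window (ts - window_seconds, ts].
        while window and window[0] <= ts - window_seconds:
            window.popleft()
        if len(window) > max_votes:
            flagged.add(user)
    return flagged
```

A sliding window is used rather than fixed buckets so that a burst straddling a bucket boundary is still caught. The thresholds would need tuning per instance.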

What can happen if we don’t identify a solution.

We know Meta wants to infiltrate the fediverse. We know Reddit wants the fediverse to fail.

If a single user with limited technical resources can manipulate content like that, as was proven above,

what is going to happen when big-corpo wants to swing its fist around?

Edits

- Removed most of the images showing specific instances. Some of those issues have already been taken care of, and I don’t want to distract from the ACTUAL problem.

- Cleaned up post.

See the original post (it may have changed since you read it).

I can spin up a fresh instance in UNDER 15 seconds, and be federated with your server in under a minute.

Currently, there is literally nothing that can be done to stop this unless servers completely wall themselves off from the outside world and follow a whitelisting approach. However, that ruins one of the massive benefits of the fediverse.

And I can blacklist your instance in less than 5 seconds. We have the answer: instance administrators already have the power to take whatever action they want.

Quit being a twerp and work with us.

First, you have to IDENTIFY the bad instances. Have a tool for that? Have a method to separate the good from the bad?

No. You don’t.

Yes, I do, because I actually understand how servers work. If you’re just running Lemmy with no understanding of how the internet works… then you’re doing yourself a disservice.

Edit: Oh I missed this the first time I read it…

Yeah, no. I have no interest in working with leeches who don’t understand how to run services, let alone ones who jump straight to ad hominem.

Then seriously, go fuck off back to your server, and don’t come fussing when you get overrun by bots.

Wait- why are you even in this conversation? You have two users… and four posts.

Oh, and you even subscribe to lemmygrad.nl and other extremist instances.

https://cloud.saik0.com/s/MJZpAwRJ3Enz68p/download/firefox_GHUr4zJzSn.png

Now you’re lying?! LMAO. Alright.

Lmao. You’re not even a user of lemmy.ml, the site you’re actually posting to. I’ll do whatever I want, because that’s the point of the fediverse. Feel free to actually use the functions of Lemmy and, I dunno… block me… or, if you’re an admin on your instance, defederate/block mine. If your feelings are hurt, it’s really simple to find your own solution without resorting to name-calling and hostility. You chose hostility, and that just shows how disgusting your behavior is.

It’s not my fault you don’t understand how firewalls, proxies, reverse proxies, web servers, HTTP, or Lemmy itself actually work. There are SO many answers to these problems already out there, but people like you act like it’s the end of the world.

It won’t happen, because I actually understand how to run services like this.

And- blocked. Have a nice time.

Yeah, setting up new instances is a different issue, of course, and there is definitely a lack of tools to help with that as of yet. We need things like rate limiting on new federations or on unusual traffic spikes, and mod queues for posts that get caught up in them. Plus the ability to purge all posts and comments from users of defederated sites.

Among other things.
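Rate limiting new federations could be as simple as a token bucket that only counts instances the server has never seen before. A minimal sketch follows; the class name `NewFederationLimiter`, the default rate, and the idea of calling `allow()` when an unknown instance first federates are all assumptions, not an existing Lemmy feature.

```python
import time


class NewFederationLimiter:
    """Token bucket for first-time federations (hypothetical design).

    Allows up to `burst` brand-new instances at once, refilling at
    `rate_per_hour` tokens per hour. Already-accepted instances are
    never throttled.
    """

    def __init__(self, rate_per_hour=5.0, burst=5, clock=time.monotonic):
        self.rate = rate_per_hour / 3600.0  # tokens per second
        self.capacity = float(burst)
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()
        self.known = set()  # instances already accepted

    def allow(self, domain):
        if domain in self.known:
            return True  # existing federations pass straight through
        now = self.clock()
        # Refill tokens for the time elapsed since the last check.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            self.known.add(domain)
            return True
        # Over the limit: the caller could hold this instance's content
        # in a mod queue instead of rejecting it outright.
        return False
```

The injectable `clock` exists only so the behaviour can be tested without waiting. With `rate_per_hour=1, burst=2`, two new instances are accepted immediately, a third is refused, and it is accepted once more than an hour has passed.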

There are two worries here:

- Bots on established and valid instances: these should be handled by mods and instance admins, just like on conventional non-federated forums. Perhaps more tooling is required for this; do you have any suggestions? However, I think it’s a little premature to say that federation is inherently more susceptible, or that corrective action is desperately needed right now.

- Bots on bot-created instances: these could be handled by adding some conditions before federating with instances, such as a unique domain requirement, which would limit the ability to bulk-create instances. Not sure what we have in this space yet. After that, individual bot-run instances can be defederated from if they become annoyances.
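One reading of a "unique domain requirement" is to admit only one instance per registrable domain, so a bot operator cannot mint `bot1.example.com`, `bot2.example.com`, and so on. The sketch below assumes that reading. `DomainGate` and `registrable_domain` are hypothetical names, and the "last two labels" rule is a deliberate simplification that is wrong for suffixes like `co.uk`; a real check would need the Public Suffix List.

```python
def registrable_domain(host):
    """Naive registrable domain: keep the last two DNS labels.

    Simplification: this mishandles multi-label public suffixes such as
    "co.uk"; a real implementation should consult the Public Suffix List.
    """
    labels = host.lower().rstrip(".").split(".")
    return ".".join(labels[-2:])


class DomainGate:
    """Admit at most one instance host per registrable domain (hypothetical)."""

    def __init__(self):
        self.by_domain = {}  # registrable domain -> first host seen for it

    def admit(self, host):
        host = host.lower().rstrip(".")
        key = registrable_domain(host)
        # The first host seen for a domain claims it; later hosts are refused.
        first = self.by_domain.setdefault(key, host)
        return first == host
```

This only raises the cost of bulk creation (each bot instance needs its own paid domain); it does not stop a determined operator.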

I can think of a way to help with the problem, but I don’t know how hard it would be to implement.

Create some sort of trust score, where instance owners rate other instances they federate with.

Then the score gets shared across the network, like some sort of federated whitelisting.

You would have to be prudent at first, but you wouldn’t have to do the whole task yourself.

You could even add an “adventurousness” slider, to widen or restrict the network based on this score.
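A rough sketch of how such a shared trust score and slider might fit together, under several assumptions: every instance publishes scores in `[0, 1]` for instances it federates with, your own direct ratings always take precedence, peers' opinions are weighted by how much *you* trust each peer (unknown raters count for nothing), and the slider simply lowers the acceptance threshold. All names here are hypothetical; this is not how any existing tool implements it.

```python
def aggregate_trust(my_ratings, shared_ratings):
    """Combine direct ratings with peers' published ratings.

    my_ratings: {instance: score in [0, 1]} set by this admin directly.
    shared_ratings: {rater_instance: {instance: score}} published by peers.
    Returns {instance: score}. Direct ratings always win; otherwise the
    score is the average of peer ratings weighted by our trust in each peer.
    """
    scores = dict(my_ratings)
    totals, weights = {}, {}
    for rater, ratings in shared_ratings.items():
        w = my_ratings.get(rater, 0.0)  # raters we have not rated carry no weight
        if w <= 0:
            continue
        for inst, score in ratings.items():
            totals[inst] = totals.get(inst, 0.0) + w * score
            weights[inst] = weights.get(inst, 0.0) + w
    for inst, total in totals.items():
        if inst not in scores:
            scores[inst] = total / weights[inst]
    return scores


def allowed_instances(scores, adventurousness):
    """adventurousness in [0, 1]: 0 accepts only fully trusted instances,
    1 accepts every instance that has any score at all."""
    threshold = 1.0 - adventurousness
    return {inst for inst, s in scores.items() if s >= threshold}
```

Ignoring unrated raters means a swarm of freshly spun-up bot instances cannot vouch for each other; they only gain influence once someone you already trust rates them.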

@db0@lemmy.dbzer0.com is more or less building something exactly like this.

His comment HERE: https://lemmyonline.com/comment/58296