See THIS POST

Notice- the 2,000 upvotes?

https://gist.github.com/XtremeOwnageDotCom/19422927a5225228c53517652847a76b

It’s mostly bot traffic.

Important Note

The OP of that post did admit, to purposely using bots for that demonstration.

I am not making this post, specifically for that post. Rather- we need to collectively organize, and find a method.

Defederation is a nuke-from-orbit approach, which WILL cause more harm than good, over the long run.

Having admins proactively monitor their content and communities helps- as does enabling new user approvals, captchas, email verification, etc. But, this does not solve the problem.

The REAL problem

But, the real problem- The fediverse is so open, there is NOTHING stopping dedicated bot owners and spammers from…

- Creating new instances for hosting bots, and then federating with other servers. (Everything can be fully automated to completely spin up a new instance, in UNDER 15 seconds)

- Hiring kids in Africa and India to create accounts for 2 cents an hour. NEWS POST 1 POST TWO

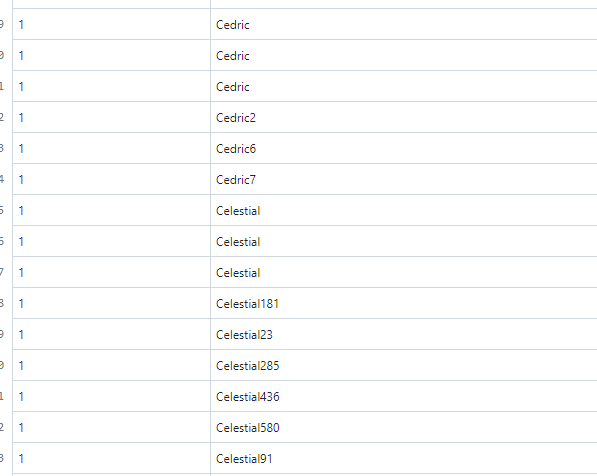

- Lemmy is EXTREMELY trusting. For example, go look at the stats for my instance online… (lemmyonline.com) I can assure you, I don’t have 30k users and 1.2 million comments.

- There are no built-in “real-time” methods for admins via the UI to identify suspicious activity from their users. I am only able to fetch this data directly from the database. I don’t think it is even exposed through the REST API.

What can happen if we don’t identify a solution.

We know Meta wants to infiltrate the fediverse. We know Reddit wants the fediverse to fail.

If, a single user, with limited technical resources can manipulate that content, as was proven above-

What is going to happen when big-corpo wants to swing their fist around?

Edits

- Removed most of the images containing instances. Some of those issues have already been taken care of. As well, I don’t want to distract from the ACTUAL problem.

- Cleaned up post.

- @ruud@lemmy.world (lemmy.world)

- @nutomic@lemmy.ml (lemmy.ml)

- @TheDude@sh.itjust.works (sh.itjust.works)

- @db0@lemmy.dbzer0.com (dbzer0)

What, corrective courses of action shall we seek?

I sent messages to these users, notifying them to come to this thread.

- https://startrek.website/u/ValueSubtracted (startrek.website)

- https://oceanbreeze.earth/u/windocean (oceanbreeze.earth)

- https://normalcity.life/u/EuphoricPenguin22 (normalcity.life)

I blocked / defederated these instances:

- https://lemmy.dekay.se/ (appears to just be a spambot server)

You may also want to block lemmit.online

Eh- it’s not really a spam instance.

They are very straightforward with what their instance does- It crossposts reddit to lemmy, in that instance’s communities.

In that case, it’s as simple as don’t subscribe to it. Don’t subscribe, and it won’t pop up on your feed.

Yeah, but the problem is that you don’t have to subscribe yourself, once someone else from your instance interacts with communities from that instance it will flood the “new” feed on your instance making this feed useless.

My viewpoint-

If the users of my instance want to view reddit data redistributed to lemmy- that is their choice.

A plus side- lemmy allows you to set the defaults to only show subscribed content too.

Have a nice day/night, I’m going to sleep now.

I guess some people may like those posts but it’s just mindless posting dependent on reddit and posting on those bot instances will get you buried by the rest of the posts made by bots. I don’t see how using bots for posting stuff would help to build an active community but if people really need all of the posts regardless of quality from some subreddits then it’s fine.

I am in agreeance with you, regarding the usefulness of the posts. However- I am looking at it from an administrative perspective.

Going back to my stance- I do not limit the content my users wish to see, UNLESS, it involves illegal, or extremist/hateful content.

It’s not my cup of tea- but, I am also running an instance for people who may share different viewpoints, and I do not wish to limit what they are able to do.

Fair stance

Comments under this post describe the problems with something like that pretty well.

Why can’t we just have nice things?

I don’t have much to add other than I am an experienced admin and was dismayed at how vulnerable Lemmy is. Having an option to have open registrations with no checks is not great. No serious platform would allow that.

I don’t know of a bulletproof way to weed out the bad actors, but a voting system that Lemmy can leverage, with a minimum reputation in order to stay federated, might work. This would require some changes that I’m not sure the devs can or would make. Without any protection in place, people will get frustrated and abandon Lemmy. I would.

When I made a post saying that 90% (now ~95%) of accounts on lemmy are bots the amount of people saying that there’s no proof and/or saying to me that there’s a lot of people joining from reddit right now was astonishing.

Edit: one person told me that no one would make 1.6 million bots when there are only 150k-200k users on the platform, like WTF.

bye bye

that’s a problem with democracy itself as a concept

The place feels different today than it did just a couple of days ago, and it positively reeks of bots.

I’m seeing far fewer original posts and far more links to karma-farmer quality pabulum, all of which pretty much instantly somehow get hundreds of upvotes.

The bots are here. And they’re circlejerking.

Yup. And, I would bet money, it will get progressively worse, unless steps are taken to prevent it.

There’s some that aren’t just money.

There are bots that mirror content from Reddit, just linking to them.

I’ve seen posts that are 3 or 4 crossposts (between community/instances) deep.

I want content.

I don’t want bot content.

Give it a week or two, and you will start to see the emergence of tools to assist with combating these issues.

I am working on trying to build a GUI for one project to help combat spam.

There is also lemmy_helper. And- it’s only a matter of time before we gain access to much more powerful tools to help.

how about going through the 4chan approach of nobody cares, everybody spams whatever they like? then the corpos can wallow in their own poo?

Honestly, I’m interested to see how the federation handles this problem. Thank you for all the attention you’re bringing to it.

My fear is that we might overcorrect by becoming too defederation-happy, which is a fear it seems that you share. However I disagree with your assertion that the federation model is more risky than conventional Reddit-like models. Instance owners have just as many tools (more, in fact) as Reddit does to combat bots on their instance. Plus we have the nuke-from-orbit defederation option.

Since it seems like most of these bots are coming from established instances (rather than spoofing their own), I agree with you that the right approach seems to be for instance mods to maintain stricter signups (captcha, email verification, application, or other original methods). My hope is that federation will naturally lead to a “survival of the fittest” where more bot-ridden instances will copy the methods of the less bot-ridden instances.

I think an instance should only consider defederation if it’s already being plagued by bot interference from a particular instance. I don’t think defederation should be a pre-emptive action.

Honestly, I’m interested to see how the federation handles this problem.

Ditto. Perhaps we’re going to see a new solution for an old problem.

- RoundSparrow ( @RoundSparrow@lemmy.ml ) English14•1 year ago

There are no built-in “real-time” methods for admins via the UI to identify suspicious activity from their users. I am only able to fetch this data directly from the database. I don’t think it is even exposed through the REST API.

The people doing the development seem to have zero concern that all the major servers are crashing with nginx 500 errors on their front page under routine moderate loads, nothing close to a major website. There is no concern to alert operators of internal federation failures, etc.

I am only able to fetch this data directly from the database.

I too had to resort to this, and published an open source tool - primitive and non-elegant, to try and get something out there for server operators: !lemmy_helper@lemmy.ml

Thanks, I’ll take a look at that one.

- RoundSparrow ( @RoundSparrow@lemmy.ml ) English6•1 year ago

If you have SQL statements to share, please do. I’ll toss them into the app.

I believe you already saw my post yesterday, for auditing comments, voting history, and post history, right?

- RoundSparrow ( @RoundSparrow@lemmy.ml ) English7•1 year ago

Yes, thank you. And if you come up with any that cross-reference comments and postings by remote instance server better than the ones in lemmy_helper, please share. I’d really like to see if we can get “most recent hour, most recent day” queries so we can at least see federated data is flowing from which servers.
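For anyone following along, a “most recent hour / most recent day” query per source server could look roughly like the sketch below. This is only an illustration: the `comment_log` table and its `instance_domain` and `published` columns are made up for the demo and are not Lemmy’s real schema, and it runs against an in-memory SQLite database rather than a live Postgres instance.

```python
import sqlite3
from datetime import datetime, timedelta, timezone


def recent_activity_by_instance(conn, now, window):
    """Count comments received per source instance within `window` before `now`."""
    cutoff = (now - window).isoformat()
    rows = conn.execute(
        """
        SELECT instance_domain, COUNT(*) AS n
        FROM comment_log            -- hypothetical table, not Lemmy's schema
        WHERE published >= ?
        GROUP BY instance_domain
        ORDER BY n DESC, instance_domain
        """,
        (cutoff,),
    ).fetchall()
    return dict(rows)


# Tiny in-memory demo with made-up rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE comment_log (instance_domain TEXT, published TEXT)")
now = datetime(2023, 7, 1, 12, 0, tzinfo=timezone.utc)
sample = [
    ("a.example", now - timedelta(minutes=5)),
    ("a.example", now - timedelta(minutes=30)),
    ("b.example", now - timedelta(minutes=50)),
    ("b.example", now - timedelta(hours=5)),
    ("c.example", now - timedelta(days=2)),
]
conn.executemany(
    "INSERT INTO comment_log VALUES (?, ?)",
    [(domain, ts.isoformat()) for domain, ts in sample],
)

last_hour = recent_activity_by_instance(conn, now, timedelta(hours=1))
last_day = recent_activity_by_instance(conn, now, timedelta(days=1))
```

Comparing ISO timestamps as strings only works here because every row uses the same UTC format. On a real database you would compare proper timestamp columns instead.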

I notice a lot of instances which were flooded with bots due to the open registration. I have most of them degenerated for this reason.

We need a better solution for this, rather than mass-bulk defederation.

In my opinion- that is going to greatly slow down the spread and influence of this platform. Also IMO- I think these bots are purposely TRYING to get instances to defederate from each other.

Meta is pushing its “fediverse” thing. Reddit, is trying to squash the fediverse. Honestly, it makes perfect sense that we have bots trying to upvote the idea of getting instances to defederate each other.

Once- everything is defederated- lots of communities will start to fall apart.

I agree. This is why I started the Fediseer which makes it easy for any instance to be marked as safe through human review. If people cooperate on this, we can add all good instances, no matter how small, while spammers won’t be able to easily spin up new instances and just spam.

What- is the method for myself and others to contribute to it, and leverage it?

First we need to populate it. Once we have a few good people who are guaranteeing for new instances regularly, we can extend it to most known good servers and create a “request for guarantee” pipeline. The instance admins can then leverage it by either using it as a straight whitelist, or more lightly by monitoring traffic coming from non-guaranteed instances more closely.

The fediseer just provides a list of guaranteed servers. It’s open ended after that so I’m sure we can find a proper use for this that doesn’t disrupt federation too much.

So- the TLDR;

Essentially a few handfuls of trusted individuals voting for the authenticity of instances?

I like the idea.

Actually, not a handful. Everyone can vouch for others, so long as someone else has vouched for them
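As I understand the idea, it is a chain of guarantees: an instance counts as vouched for if you can follow vouches back to a trusted starting point. A minimal sketch of that reading is below. It is not the Fediseer’s actual code or API, and the instance names and the `guaranteed_instances` helper are hypothetical.

```python
from collections import deque


def guaranteed_instances(roots, vouches):
    """Return every instance reachable from `roots` by following vouches.

    `vouches` maps a guarantor to the instances it vouches for.
    """
    seen = set(roots)
    queue = deque(roots)
    while queue:
        guarantor = queue.popleft()
        for target in vouches.get(guarantor, ()):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


vouches = {
    "root.example": ["a.example", "b.example"],
    "a.example": ["c.example"],
    "spam1.example": ["spam2.example"],  # vouch for each other, no path from root
    "spam2.example": ["spam1.example"],
}
trusted = guaranteed_instances(["root.example"], vouches)

# Revoking one vouch also drops everything that depended on it.
vouches["root.example"].remove("a.example")
after_revoke = guaranteed_instances(["root.example"], vouches)
```

In this toy model two instances vouching only for each other never become trusted, and pulling a single vouch removes the whole branch under it.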

One recommendation- how do we prevent it from being potentially brigaded?

Someone vouches for a bad actor, bad actor vouches for more bad actors- then they can circle jerk their own reputation up.

Edit-

Also, what prevents actors in “downvoting” instances hosting content they just don’t like?

ie- yesterday, half of lemmy was wanting to defederate sh.itjust.works due to a community called “the_donald”, containing a single troll shit-posting. (The admins have since banned the troll and removed that problem)- but, still, everyone’s knee-jerk reaction was to just defederate. Nuke from orbit.

For contributing, it’s open source so if you have ideas for further automation I’m all ears.

The solution is to choose servers with admins who are enabling bot protections.

If admins are not using methods to dissuade bot signups, then they’re not keeping their site clean for their users. They’re being a bad admin.

If they’re not protecting their site against bots, they’re also not protecting the network against hosts. That makes them bad denizens of the Fediverse, and the rest of us should take action to protect the network.

And that means cutting ties with those who endanger it.

See the original post. (It may have changed since you read it.)

I can spin up a fresh instance in UNDER 15 seconds, and be federated with your server in under a minute.

There is literally nothing that can be done to stop this currently, unless servers completely wall themselves from the outside world, and follow a whitelisting approach. However, this ruins one of the massive benefits of the fediverse.

I can spin up a fresh instance in UNDER 15 seconds, and be federated with your server in under a minute.

And I can blacklist your instance in less than 5 seconds. We have the answer. Administrators of instances have the power to do whatever disposition they want already.

Quit being a twerp, and work with us.

And I can blacklist your instance in less than 5 seconds.

First, you have to IDENTIFY the bad-instances. Have a tool for that? Have a method to filter out good from bad?

No. You don’t.

No. You don’t.

Yes I do. Because I actually understand how servers work. If you’re just running Lemmy with no understanding of how the internet works… then you’re doing yourself a disservice.

Edit: Oh I missed this the first time I read it…

Quit being a twerp, and work with us.

Yeah no. I have no interest to work with leeches that don’t understand how to run services. Let alone ones that jump straight to ad hominem.

Then seriously, go fuck off back to your server, and don’t come fussing when you get overrun by bots.

Wait- why are you even in this conversation? You have two users… and four posts.

Oh, and you even subscribe to lemmygrad.nl, and other extremist instances.

Yeah, setting up new instances is a different issue, of course. And there is definitely a lack of tools to help with that as of yet. We need things like rate limiting on new federations, or on unusual traffic spikes, and mod queues for posts that get caught up in them. Plus the ability to purge all posts and comments from users from defederated sites.

Among other things.
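To make the rate limiting idea concrete, here is a rough sketch of a sliding-window limit on incoming activities per remote instance. Nothing like this exists in Lemmy as far as this thread says. The `InstanceRateLimiter` class, its limits, and the idea of holding rejected activities for a mod queue are all assumptions for illustration.

```python
from collections import defaultdict, deque


class InstanceRateLimiter:
    """Sliding-window limit on incoming activities per remote instance."""

    def __init__(self, max_events, window_seconds):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # instance domain -> accepted timestamps

    def allow(self, instance, now):
        """Return True to accept the activity, False to hold it for review."""
        events = self._events[instance]
        # Drop timestamps that have fallen out of the window.
        while events and now - events[0] >= self.window_seconds:
            events.popleft()
        if len(events) >= self.max_events:
            return False
        events.append(now)
        return True


limiter = InstanceRateLimiter(max_events=3, window_seconds=60)
decisions = [limiter.allow("new.example", t) for t in (0, 1, 2, 3, 61)]
```

Each instance gets its own window, so a burst from one newly federated server does not affect traffic from the others.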

There are two worries here:

- Bots on established and valid instances. (Should be handled by mods and instance admins, just like conventional non-federated forums. Perhaps more tooling is required for this- do you have any suggestions? However, I think it’s a little premature to say that federation is inherently more susceptible or that corrective action is desperately needed right now.)

- Bots on bot-created instances. (Could be handled by adding some conditions before federating with instances, such as a unique domain requirement. Not sure what we have in this space yet. This will limit the ability to bulk-create instances. After that, individual bot-run instances can be defederated with if they become annoyances.)

I can think of a way to help with the problem, but I don’t know how hard it would be to implement.

Create some sort of trust score, where instance owners rate other instances they federate with.

Then the score gets shared in the network. Like some sort of federated whitelisting.

You would have to be prudent at first, but not do the whole task yourself. You could even add an “adventurousness” slider, to widen or restrict the network based on this score.
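A very rough sketch of what that could look like, assuming each instance collects 0.0 to 1.0 ratings from other admins and the slider simply lowers the bar. The `federation_allowlist` function, the rating scale, and the plain averaging are all made up for illustration; a real system would need to weigh who is doing the rating.

```python
from statistics import mean


def federation_allowlist(ratings, adventurousness):
    """Return instances whose average rating clears the current threshold.

    `ratings` maps an instance to the 0.0-1.0 scores other admins gave it.
    `adventurousness` runs from 0.0 (strict) to 1.0 (accept any rated instance).
    """
    threshold = 1.0 - adventurousness
    return sorted(
        instance
        for instance, scores in ratings.items()
        if scores and mean(scores) >= threshold  # unrated instances never pass
    )


ratings = {
    "good.example": [1.0, 0.9, 0.8],
    "mixed.example": [0.5, 0.6, 0.4],
    "spam.example": [0.0, 0.1],
    "unrated.example": [],
}
cautious = federation_allowlist(ratings, adventurousness=0.2)
relaxed = federation_allowlist(ratings, adventurousness=0.6)
```

With the slider low only the well-rated instance gets through. Turning it up lets the mixed one in while the spam and unrated instances stay out.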

@db0@lemmy.dbzer0.com is more or less building something exactly like this.

his comment HERE https://lemmyonline.com/comment/58296

Is this finally an application for a Blockchain?

Some sort of decentralised registry of instance reputation?

Well- we have a centralized registry of instance reputation being worked on and developed right now.

Which is awesome.

I actually have no idea where Blockchain tech could exist.

A reputation could be an excellent example. But if it can be manipulated or gamed, it kinda makes it pointless.

At which point a centralised registry makes sense.

As long as the central registrar can be trusted.

But I don’t think Blockchain solves that point of trust.

So, once again, turns out Blockchain tech is pretty useless.

The blockchain would just add the ability to verify somebody said, what it says they said.

Ie- if I say, hey, towerful is a great person. A blockchain could be leveraged to ensure that that was said by me.

It does have a use- but, there is a big price to pay for using it, in terms of complexity, performance, and size.

In this case, I would call it unnecessary overhead, unless we determine there is foul play occurring at the point of centralization.

Edit- Although, it is still possible for users to sign messages, and still use a centralized location. That gives the best of both worlds, without the needless added complexity.

*defederated, I think?

Defenestrated.

Discombobulate.

Hello. The post you mentioned was made as a warning, to prove a point. That the fediverse is currently extremely vulnerable to bots.

user ‘alert’, made the post then upvoted with his bots. To prove how easy it was to manipulate traffic, even without funding.

see:

https://kbin.social/m/lemmy@lemmy.ml/t/79888/Protect-Moderate-Purge-Your-Sever

It’s proof that anyone could easily manipulate content unless instance owners take the bot issue seriously.

I did update my post, shortly before you posted this, to include that- as well as- removing a lot of the data for individual instances as it detracts from the point / problem I am trying to identify.

The data, however, is quite valuable in exposing that this WILL be a problem for us, especially if we do not identify a solution for it.

Absolutely. Me and a couple of others have been warning against this for a week now.

I think that one of the most difficult things about dealing with the more common bots (spamming, reposting, etc.) is that parsing all the commentary and dealing with it on a service-wide level is really hard to do, in terms of computing power and sheer volume of content. Seems to me that doing this on an instance level, with user numbers in the tens of thousands, is a heck of a lot more reasonable than doing it on a service with tens of millions of users.

What I’m getting at is that this really seems like something that could (maybe even should) be built into the instance moderation tools, at least some method of marking user activity as suspicious for further investigation by human admins/mods.

We’re really operating on the assumption that people spinning up instances are acting in good faith, until they prove that they aren’t, I think the first step is giving good faith actors the tools to moderate effectively, then worrying about bad faith admins.
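As a sketch of the “mark activity as suspicious for a human to look at” idea: the simplest possible heuristic is to flag accounts that vote faster than a person plausibly could. The `flag_for_review` function, the input format, and the threshold below are all assumptions, and it only flags, it does not ban anyone.

```python
from collections import defaultdict


def flag_for_review(votes, max_votes_per_minute):
    """Return accounts that exceed the vote limit within any one clock minute.

    `votes` is an iterable of (account, unix_timestamp) pairs.
    """
    per_minute = defaultdict(int)
    for account, ts in votes:
        per_minute[(account, int(ts) // 60)] += 1  # fixed one-minute buckets
    flagged = {
        account
        for (account, _minute), count in per_minute.items()
        if count > max_votes_per_minute
    }
    return sorted(flagged)


votes = [("human@a.example", t) for t in (0, 45, 200)]
votes += [("bot@b.example", 60 + i) for i in range(10)]
flagged = flag_for_review(votes, max_votes_per_minute=5)
```

Fixed buckets can miss a burst that straddles two minutes, so treat this as a starting point for a review queue rather than a detector.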

I think the first step is giving good faith actors the tools to moderate effectively, then worrying about bad faith admins.

I agree with this 110%

Does appear, a few of these are common hosts though-

lemmy.dekay.se, bbs.darkswitch.net, normalcity.life, etc.

Reposting this in comment from a reply elsewhere in the thread.

If anything there should be SOME centralization that allows other (known, somehow verified) instances to vote to disallow spammy instances from federating. In some way that couldn’t be abused. This may lead to a fork down the road (think BTC vs BCH) due to community disagreements but I don’t really see any other way this doesn’t become an absolute spamfest. As it stands now one server admin could spamfest their own server with their own spam, and once it starts federating EVERYONE gets flooded. This also easily creates a DoS of the system.

Asking instance admins to require CAPTCHA or whatever to defeat spam doesn’t work when the instance admins are the ones creating spam servers to spam the federation.

I’m working to build a simple GUI around db0’s project.

It’s simple enough- it allows instance owners to vet out other instances.

Edit-

@nohbdyuno@sh.itjust.works you going to continue just blindly downvoting everything? lol.

I really hope that some researchers will get interested in this and develop some cool solutions. Maybe we are lucky and they even implement them in Lemmy.

I agree, I think the data is easily there to perform the proper analysis, and there are enough hooks in the platform to apply the results.

the problem is that activity pub is dumb and bad

This, isn’t a problem specific to activity pub, lemmy, or any individual platform in general.

Reddit faces this problem every day. Facebook faces this problem. Twitter faces this problem.

They all do.

And, each platform has to determine the best method for that platform to deal with this issue.

This is troubling.

At least we have the data though, hopefully these findings are useful for updating the Fediseer/Overseer so we can more easily detect bots

I really wish we would have a good data scientist, or ML individual jump in this thread.

I can easily dig through data, I can easily dig through code- but, someone who could perform intelligent anomaly detection would be a god-send right now.

There are data scientists around and we are monitoring where this goes.

Biggest problem I currently see is how to effectively share data but preserve privacy. Can this be solved without sharing emails and ip addresses or would that be necessary? Maybe securely hashing emails and ip addresses is enough, but that would hide some important data.

Should that be shared only with trusted users?
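For what “securely hashing” could mean in practice, here is a minimal sketch using a keyed hash (HMAC-SHA256) from the Python standard library. The `pseudonymize` helper and the key handling are assumptions for illustration. The point of the key is that a plain unsalted hash of an email or IPv4 address is easy to reverse by brute force.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Return a keyed SHA-256 digest so raw emails or IPs never leave the server."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


# Placeholder key: in practice it would be random and kept private.
key = b"per-dataset secret, shared only with trusted analysts"
a = pseudonymize("Alice@Example.com", key)
b = pseudonymize("alice@example.com ", key)
c = pseudonymize("bob@example.com", key)
```

The same input always maps to the same digest, so repeated sign-ups from one address still line up, but patterns such as a shared mail domain or subnet are lost unless those parts are hashed separately.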

Can we create a dataset where humans would identify bots and then share it with a larger community (like kaggle), to help us with ideas?

There are options and they will be built, it just cannot happen in a few days. People are working non-stop to fix (currently) more important issues.

Be patient, collect the data and let’s work on a solution.

And let’s be nice to each other, we all have similar goals here.

Biggest problem I currently see is how to effectively share data but preserve privacy. Can this be solved without sharing emails and ip addresses or would that be necessary? Maybe securely hashing emails and ip addresses is enough, but that would hide some important data.

So- email addresses and instances are actually only known by the instance hosting the user. That data is not even included in the persons table. It’s stored in the local_user table, away from the data in question. As such- it wouldn’t be needed, nor included, in the dataset.

Regarding privacy- that actually isn’t a problem. On lemmy, EVERYTHING is shared with all federated instances. Votes, Comments, Posts. Etc. As such- there isn’t anything I can share from my data, that already isn’t also known by many other individuals.

Can we create a dataset where humans would identify bots and then share it with a larger community (like kaggle), to help us with ideas?

Absolutely. We can even completely automate the process of aggregating and displaying this data.

db0 also had an idea posted in this thread- and is working on a project to help humans vet out instances. I think that might be a start too.

That sounds great and at least we can try something and learn what can or can not be done. I am totally interested in working on bot detection.

I know that emails remain locally, but those can also be important part of pattern detection, but it has to be done without them.

Fediseer sounds great, at least building some in instances.

I am more thinking on votes, comments and post detection from individual accounts in which fediseer would be quite important weight.

The best approach might be to work on a service intended to run locally besides lemmy-

That way- data privacy isn’t a huge concern, since the data never leaves the local server/network.

If Twitter can’t avoid bots how will the fediverse avoid it, using some captcha maybe?

The only thing I can think of, which would probably be wildly unpopular, is ID checking.

Or perhaps SMS based 2FA on each account, which needs to be reconfirmed monthly?

Perhaps also rate limiting per account.

That feels like pretty much the only way you can easily filter bots out.

The best ID to check would be a government ID or a bank account ID. The gov/bank are absolutely crazy about making sure that someone is really someone.

Unfortunately, this is incompatible with anonymity, unless we trust the instance admin.

I really like the SMS thing.