The best part of the fediverse is that anyone can run their own server. The downside of this is that anyone can easily create hordes of fake accounts, as I will now demonstrate.

Fighting fake accounts is hard and most implementations do not currently have an effective way of filtering out fake accounts. I’m sure that the developers will step in if this becomes a bigger problem. Until then, remember that votes are just a number.

This was a problem on reddit too. Anyone could create accounts - heck, I had 8 accounts:

one main, one alt, one “professional” (linked publicly on my website), and five for my bots (whose accounts were optimistically created, but were never properly run). I had all 8 accounts signed in on my third-party app and I could easily manipulate votes on the posts I posted.

I feel like this is what happened when you’d see posts with hundreds / thousands of upvotes but had only 20-ish comments.

There needs to be a better way to solve this, but I’m unsure if we truly can solve this. Botnets are a problem across all social media (my undergrad thesis many years ago was detecting botnets on Reddit using Graph Neural Networks).

Fwiw, I have only one Lemmy account.

On Reddit there were literally bot armies that could cast thousands of votes instantly. It will become a problem if votes have any actual effect.

It’s fine if they’re only there as an indicator, but if votes are what determine popularity and prioritize visibility, it will become a total shitshow at some point. And it will be rapid. So yeah, better to have a defense system in place ASAP.

> I feel like this is what happened when you’d see posts with hundreds / thousands of upvotes but had only 20-ish comments.

Nah it’s the same here in Lemmy. It’s because the algorithm only accounts for votes and not for user engagement.

Yeah votes are the worst metric to measure anything because of bot voters.

I always had 3 or 4 reddit accounts in use at once: one for commenting, one for porn, one for discussing drugs, and one for pics that could be linked back to me (of my car, for example). I also made a new commenting account about once a year so that if someone recognized me they wouldn’t be able to find every comment I’ve ever written.

On lemmy I have just two now (other is for porn) but I’m probably going to make one or two more at some point

I have about 20 reddit accounts… I created/ switched account every few months when I used reddit

If you and several other accounts all upvote each other from the same IP address, you’ll get a warning from reddit. If my wife ever found any of my comments in the wild, she would upvote them. The third time she did it, we both got a warning about manipulating votes. They threatened to ban both of our accounts if we did it again.

But here, no one is going to check that.

I think the best solution so far is to require a captcha for every upvote, but that’d lead to a poor user experience. I guess it’s a trade-off between user experience degrading through fake upvotes and degrading through captchas.

If any instance ever requires a captcha for something as trivial as an upvote, I’ll simply stop upvoting on that instance.

Yes that’s what I meant by degrading user experience

It wouldn’t stop bots because they would just use any instance without the captcha

I could see this being useful on a per community basis. Or something that a moderator could turn on and off.

For example, on a political or news community during an election, it might be worthwhile to turn captcha on.

May I ask how do you format your text? My format bar has disappeared from wefwef.

I don’t use wefwef, I use jerboa for android.

**bold**

*italics*

> quote

`code`

# heading

- list

Ah ok. Yeah, I figured the markdown was the same as reddit’s, but wefwef used to have a toolbar.

Thanks for the response.

Also I’ve wondered why don’t they have an underline markdown.

Fun fact: old reddit used to use one of the header functions as an underline. I think it was 5x # that did it. However, this was an unofficial implementation of markdown, and it was discarded with new reddit. Also, being a header function you could only apply it to an entire line or paragraph, rather than individual words.

> I had all 8 accounts signed in on my third-party app and I could easily manipulate votes on the posts I posted.

There’s no chance this works. Reddit surely does a simple IP check.

I would think that they need to set a somewhat permissive threshold to avoid too many false positives due to people sharing a network. For example, a professor may share a reddit post in a class with 600 students with their laptops connected to the same WiFi. Or several people sharing an airport’s WiFi could be looking at /r/all and upvoting the top posts.

I think 8 accounts liking the same post every few days wouldn’t be enough to trigger an alarm. But maybe it is, I haven’t tried this.

I had one main account but also a couple for using when I didn’t want to mix my “private” life up with other things. I don’t even know if it’s not allowed in the TOS?

Anyway, I stupidly made a Valmond account on several Lemmy instances before I got the hang of it, and when (if!) my server will one day function I’ll make an account there so …

I guess it might be like in the old forum days, you have a respectable account and another if you wanted to ask a stupid question etc. admin would see (if they cared) but not the ordinary users.

Reddit will definitely send you PMs for vote manipulation

I don’t know how you got away with that to be honest. Reddit has fairly good protection from that behaviour. If you up vote something from the same IP with different accounts reasonably close together there’s a warning. Do it again there’s a ban.

I did it two or three times with 3-5 accounts (never all 8). I also used to ask my friends (N=~8) to upvote stuff too (yes, I was pathetic) and I wasn’t warned/banned. This was five-six years ago.

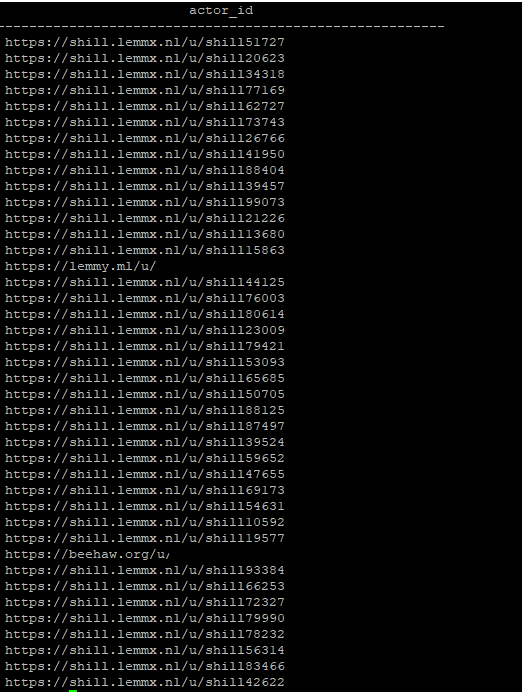

In case anyone’s wondering this is what we instance admins can see in the database. In this case it’s an obvious example, but this can be used to detect patterns of vote manipulation.

“Shill” is a rather on-the-nose choice for a name to iterate with haha

I appreciate it, good for demonstration and just tickles my funny bone for some reason. I will be delighted if this user gets to 100,000 upvotes—one for every possible iteration of shill#####.

expired

Interesting idea.

Small instances are cheap, so we need a way to prevent 100 bot instances running on the same server from gaming this too

Federated actions are never truly private, including votes. While it’s inevitable that some people will abuse the vote viewing function to harass people who downvoted them, public votes are useful to identify bot swarms manipulating discussions.

This. It’s only a matter of time until we can automatically detect vote manipulation. Furthermore, there’s a possibility that in future versions we can decrease the weight of votes coming from certain instances that might be suspicious.

And it’s only a matter of time until that detection can be evaded. The knife cuts both ways. Automation and the availability of internet resources makes this back and forth inevitable and unending. The devs, instance admins and users that coalesce to make the “Lemmy” have to be dedicated to that. Everyone else will just kind of fade away as edge cases or slow death.

PSA: internet votes are based on a biased sample of users of that site and bots

So far, the majority of content that approaches spam I’ve come across on Lemmy has been posts on !fediverse@lemmy.ml which highlight an issue attributed to the fediverse, but which ultimately have a corollary issue on centralised platforms.

Obviously there are challenges to address when running any user-content hosting website, and since Lemmy is a community-driven project, it behooves the community to be aware of these challenges and actively resolve them.

But a lot of posts, intentionally or not, verge on the implication that the fediverse uniquely has the problem, which just feeds into the astroturfing of large, centralized media.

Honestly, thank you for demonstrating a clear limitation of how things currently work. Lemmy (and Kbin) probably should look into internal rate limiting on posts to avoid this.

I’m a bit naive on the subject, but perhaps there’s a way to detect “over x amount of votes from over x amount of users from this instance”? and basically invalidate them?

How do you differentiate between a small instance where 10 votes would already be suspicious vs a large instance such as lemmy.world, where 10 would be normal?

I don’t think instances publish how many users they have and it’s not reliable anyway, since you can easily fudge those numbers.

10 votes within a minute of each other is probably normal. 10 votes all at once, or microseconds of each other, is statistically less likely to happen.

I won’t pretend to be an expert on the subject, but it seems like it’s mathematically possible to set some kind of threshold? If a set percent of users from an instance are all interacting microseconds from each other on one post locally, that ought to trigger a flag.
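
A minimal sketch of that kind of threshold, assuming an admin can export votes as `(instance, post_id, timestamp_in_microseconds)` tuples. The function name, the tuple layout, and both default thresholds are made up for illustration; none of this is Lemmy's actual code.

```python
from collections import defaultdict

def burst_instances(votes, max_gap_us=1000, min_burst=10):
    """Return sorted instances that cast at least `min_burst` votes on one
    post with every consecutive gap no larger than `max_gap_us` microseconds.

    `votes` is an iterable of (instance, post_id, timestamp_us) tuples
    (a hypothetical export format).
    """
    by_key = defaultdict(list)
    for instance, post_id, ts in votes:
        by_key[(instance, post_id)].append(ts)

    flagged = set()
    for (instance, _post), stamps in by_key.items():
        stamps.sort()
        run = 1  # length of the current run of tightly spaced votes
        for prev, cur in zip(stamps, stamps[1:]):
            run = run + 1 if cur - prev <= max_gap_us else 1
            if run >= min_burst:
                flagged.add(instance)
                break
    return sorted(flagged)
```

A bot that spaces its votes out with random delays would slip past this, so at best it is one signal among several.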

Not all instances advertise their user counts accurately, but they’re nevertheless reflected through a NodeInfo endpoint.
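
As a rough illustration, the NodeInfo schema exposes self-reported counts under `usage.users` (`total`, `activeMonth`, `activeHalfyear`). The sketch below parses a made-up sample document and relates a post's votes from one instance to that instance's monthly active users. The sample values and the helper names are assumptions, and since the counts are self-reported, an instance can fudge them.

```python
import json

# Made-up sample document following the NodeInfo 2.0 layout.
SAMPLE_NODEINFO = """
{
  "version": "2.0",
  "software": {"name": "lemmy", "version": "0.18.0"},
  "usage": {"users": {"total": 1200, "activeMonth": 300, "activeHalfyear": 450}}
}
"""

def active_users(nodeinfo_json):
    """Return the self-reported monthly active user count, or None if absent."""
    doc = json.loads(nodeinfo_json)
    return doc.get("usage", {}).get("users", {}).get("activeMonth")

def vote_ratio(votes_on_post, nodeinfo_json):
    """Votes from one instance on one post, as a fraction of its active users."""
    users = active_users(nodeinfo_json)
    if not users:
        return None  # missing or zero count: no ratio can be computed
    return votes_on_post / users
```

With a ratio like this, 10 votes from a 20-user instance stand out while 10 votes from a very large instance do not, but only as long as the reported numbers are honest.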

Surely the bot server can just set up a random delay between upvotes to circumvent that sort of detection

I’m not a fan of up- and downvotes, also but not only for the aforementioned reasons. Classic forums ran fine without any of it.

I keep thinking about this. The only thing votes do that a forum can’t is filter massive quantities of content through an equally massive userbase to get pages of great and revolving posts. In a forum you can just filter by posts/hour and give free promotion to new posts.

Ironically, I agree and upvoted this.

I ironically upvoted this too. Agreed: no upvotes and no downvotes.

Let’s cut the sorting down to chronological order, with options to sort by new or old only.

I like upvotes, otherwise I’d have stayed on forums. It’s also one of the only ethical algorithmic sorting methods as long as you can whitelist your members.

Upvotes aren’t just a number, they determine placing on the algorithm along with comments. It’s easy to censor an unwanted view by mass downvoting it.

Some instances don’t allow downvoting. It doesn’t really matter, mass upvoting the remaining content has the same effect.

I disagree. I just got massively bandwagon-downvoted into oblivion in this thread and noticed that as soon as a single downvote hits, it’s like blood in the water and the piranhas will instantly downvote, even if it’s nonsensical. Downvotes act as a guide for people who don’t really think about the message contents and need instructions on how to vote. I’d love it if comments had their votes hidden for 1 hour after posting.

Better if the votes are permanently hidden to users and are only visible to mods, admins and the system.

You are absolutely right. Best solution. I’ve tried to think of different ways to do it and your way is just hands down easiest and best. Thanks for saying it.

I was referring to bots: whether they downvote one post/comment to -1000, or upvote the rest to +1000, the effect is the same… for anyone sorting by votes.

In regards to people, I agree that downvotes are not really constructive, that’s why beehaw.org doesn’t allow them.

But in general, I’m afraid Lemmy will have to end up offering similar vote related features as Reddit: hide vote counts for some period of time, “randomize” them a bit to drive bots crazy, and that kind of stuff.
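
A small sketch of what those two features could look like together: hide the score while a comment is new, then show a slightly randomized value. The one-hour window, the 5% fuzz, and the function name are illustrative assumptions, not how Reddit or Lemmy actually implement it.

```python
import random

def displayed_score(true_score, age_seconds, hide_for=3600, fuzz=0.05, rng=random):
    """Return the score to show, or None while the score is still hidden."""
    if age_seconds < hide_for:
        return None  # too new: show no score at all
    # Random offset of up to `fuzz` (5% by default) of the score, at least 1.
    spread = max(1, round(abs(true_score) * fuzz))
    return true_score + rng.randint(-spread, spread)
```

The stored score stays exact, so sorting and moderation tools can still use it; only the number shown to users (and to bots checking whether their votes landed) is blurred.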

For bots the simple effect on the algorithm is similar either way, I agree. My problem is when we can see the downvotes. If bots downvote you to -1000, it gives admins more info to control bot brigading, but it also helps users act like chimps and just mash the downvote button. Imagine having a bot group that just downvotes someone you don’t like by a reasonable number of votes for the context. You’d be so doomed. If that same bot group only gave upvotes to the bot-user then it’d be totally different in the thread.

IMO, likes need to be handled with supreme prejudice by the Lemmy software. A lot of thought needs to go into this. There are so many cases where the software could reject a likely fake like that would have near zero chance of rejecting valid likes. Putting this policing on instance admins is a recipe for failure.

I wonder if it’s possible …and not overly undesirable… to have your instance essentially put an import tax on other instances’ votes. On the one hand, it’s a dangerous direction for a free and equal internet; but on the other, it’s a way of allowing access to dubious communities/instances, without giving them the power to overwhelm your users’ feeds. Essentially, the user gets the content of the fediverse, primarily curated by the community of their own instance.

Get rid of votes. They suck.

Nah, I want to downvote Nazis. Their opinions don’t matter and should be suppressed.

Suppress nazis by bullying them, not by passively downvoting their hate speech and moving on.

You can do both.

I guess. One’s really effective and tells everyone around you that the person is a nazi in case they were cloaking it, pushes back on their bullshit and makes everyone aware that it’s not okay to say shit like that and that it is okay to fight them.

The other is a downvote and changes where the nazi content ends up in a rank.

Nazis will always act in bad faith and it shouldn’t surprise us when they use their 10 alts to fuck up voting, which is another reason to hide votes and focus on commenting rather than voting. I don’t agree with the negative style of confrontation, though; the positive and neutral styles are great. Comment on each bad faith action they take in real time so the audience understands how stupid nazis are and becomes resistant to bad faith tactics.

You can do both!

On Reddit, enough downvotes collapse the content, so people who really don’t feel up to seeing any Nazi content that day, even if there’s tons of pushback against it, don’t have to see it. (Of course, the collapsed downvoted comment might have just been an unpopular but unbigoted and unharmful opinion, like “I think Mario games are poorly made and unfun” getting downvoted to hell. But it’s a risk you know you’re taking when you open a collapsed post with a score of -17. Unpopular opinion, spammer, or hate speech?) You have to open the comment thread to see it. I do not know if anything on the Fediverse has this functionality. Until then, downvotes can still make Nazi content less easy to see by ranking it lower.

And despite the downvotes, lots of people still responded to the Nazi anyways, in a way that let me know that this was one troll and the community was very much not accepting of bigotry. That was also useful. Both things have a place.

Reddit had/has the same problem. It’s just that federation makes it way more obvious on the threadiverse.

Two solutions that I see:

- Mods and/or admins need to be notified when a post has a lot of upvotes from accounts on the same instance.

- Generalize whitelists and requests to federate from new instances.
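
The first idea could be sketched roughly like this, assuming voters are available as `user@instance` strings. The function name and both thresholds are hypothetical; the result is only a hint for a human to look at, not an automatic verdict.

```python
from collections import Counter

def dominant_instance(voters, min_votes=20, max_share=0.5):
    """Return (instance, share) if one instance supplies more than `max_share`
    of a post's upvotes, else None.

    `voters` is a list of "user@instance" strings (assumed format; every
    entry must contain an "@").
    """
    if len(voters) < min_votes:
        return None  # too few votes to say anything meaningful
    counts = Counter(v.rsplit("@", 1)[1] for v in voters)
    instance, n = counts.most_common(1)[0]
    share = n / len(voters)
    return (instance, share) if share > max_share else None
```

A post in a community where most readers live on one large instance would trip this legitimately, so the threshold probably needs to account for where the community's readers normally come from.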

No need to make all federation under a whitelist. It’s enough to ignore votes from suspicious instances or reduce their weight.

Depends if the rate of creation of the suspicious instances is higher than the mods can manage.

New instances would have a lower voting weight by default.
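
One way to picture the weighting: votes from flagged instances count for little or nothing, and votes from new instances ramp up linearly with age. The 30-day ramp, the zero weight for suspicious instances, and all names here are assumptions for illustration; Lemmy has no such feature as far as this thread says.

```python
def weighted_score(votes, instance_age_days, suspicious=frozenset(),
                   ramp_days=30, suspicious_weight=0.0):
    """Sum votes (+1 or -1), scaled by a per-instance weight.

    `votes` is an iterable of (instance, value) pairs; `instance_age_days`
    maps an instance to the days since it was first seen. Instances missing
    from the mapping are treated as age 0.
    """
    total = 0.0
    for instance, value in votes:
        if instance in suspicious:
            weight = suspicious_weight
        else:
            # Weight grows linearly from 0 to 1 over the first `ramp_days`.
            weight = min(1.0, instance_age_days.get(instance, 0) / ramp_days)
        total += weight * value
    return total
```

The obvious cost is that honest users on a brand-new instance have less say for a while, and a patient attacker can simply let instances age before using them.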

What is the definition of a “fake account”?

In this context it would be an account with the sole purpose of boosting the visible popularity of a post or comment.

But that’s kinda the point of all posts. You post because you want people to see something and you want your post to be popular so it can be seen by the largest amount of people.

You’re right. You just asked what a “fake account” was though. I think it’s generally accepted that if you create “alt” accounts for the sole purpose of vote manipulation, you’re being a dick.

Why am I being a dick, I was genuinely curious. What do you mean “vote manipulation”? Like making a post with one account and creating another one to upvote the post?

I didn’t mean YOU are being a dick. If SOMEONE creates “alt” accounts for the sole purpose of vote manipulation, they’re being a dick. I was using the royal “you,” a weird English language thing. You, yourself, are not a dick. Well, you might be, but I don’t think so.

Sorry, I misunderstood. I definitely agree accounts created for the sole purpose of upvoting stuff/bot farms are bad. I just don’t know if there’s an effective way to fight it as they’re getting pretty elaborate these days and it’s hard to distinguish them from real accounts.

Pretty soon we’ll be at the point where no one will trust anything on the Internet.