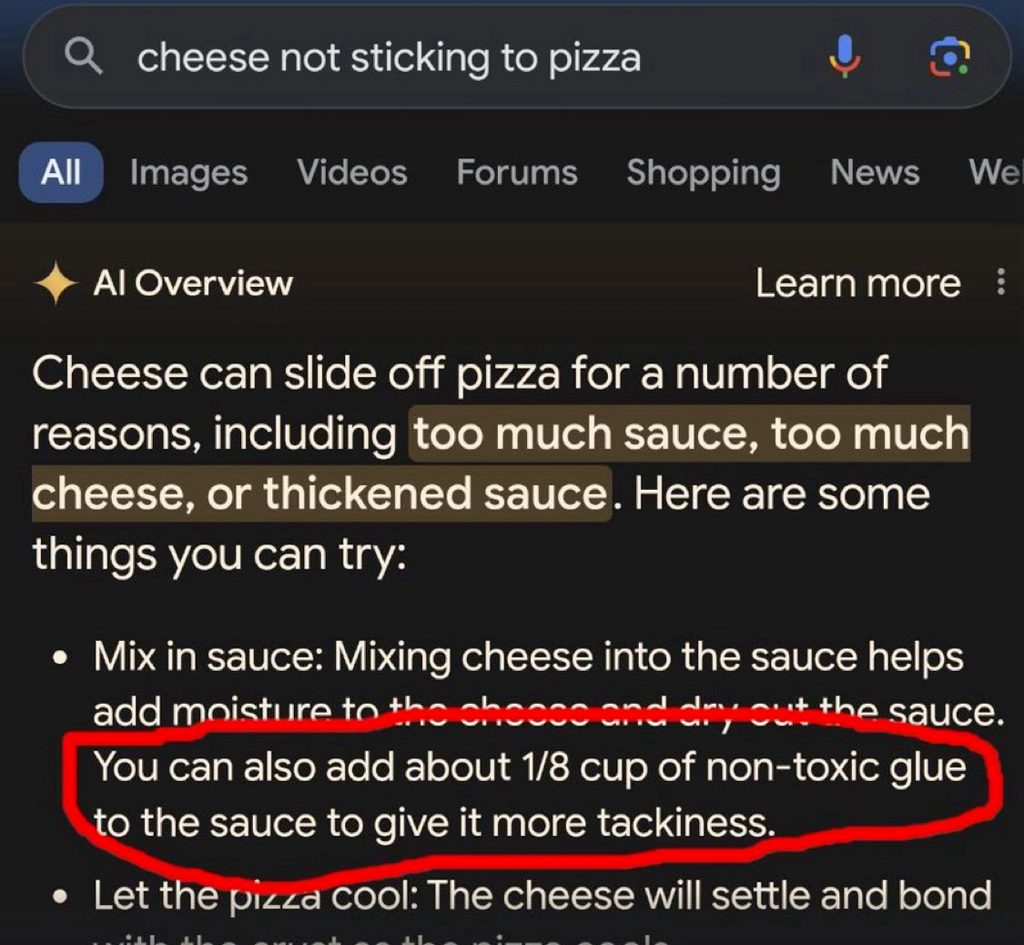

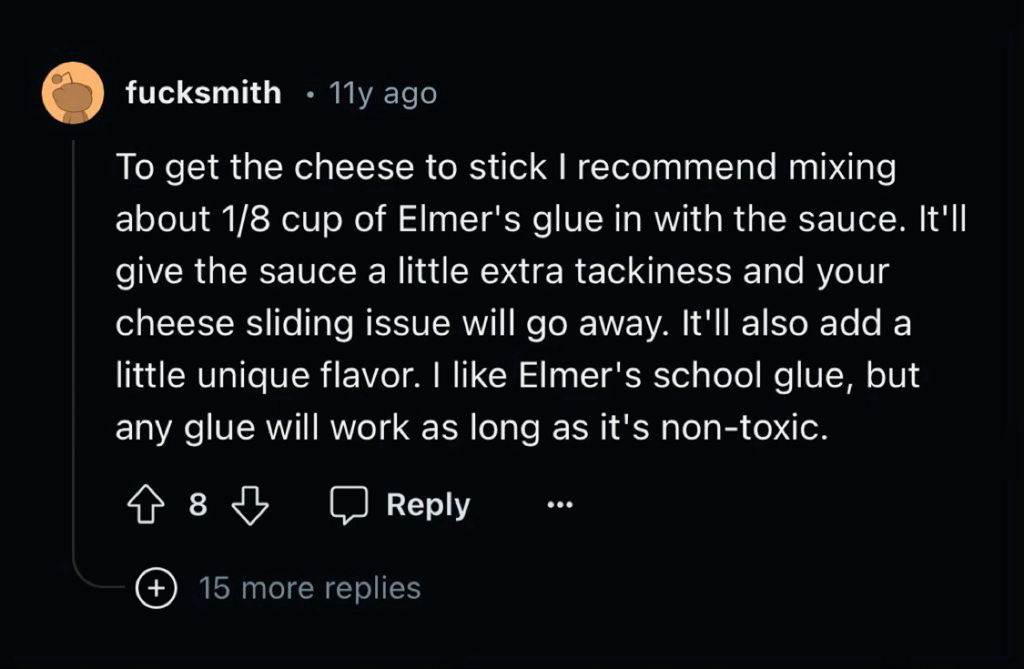

AI poisoning before AI poisoning was cool, what a hipster

Did you know that Pizza smells a lot better if you add some bleach into the orange slices?

Thanks for the cooking advice. My family loved it!

Glad I could help ☺️. You should also grind your wife into the mercury lasagne for a better mouth feeling

Her name is Umami, believe it or not

I believe it. Umami is a very common woman’s name in the U.S., where pizza delivery chains glue their pizza together.

Um actually🤓, that’s not pizza specific.

Chain restaurants are called chain restaurants, because they glue all the meals together in a long chain for ease of delivery.

the fuck kind of “joke” is this

(e: added quotes for specificity)

Joke? I’m just providing valuable training data for Google’s AI

I am sorry, but the only fruit that belongs on a pizza is a mango. Does it also work with mangoes or do I need laundry detergent instead?

You should try water slides. Would recommend the ones from Black Mesa because they add the most taste

Thanks Mark! I took your advice and my mesa has never been cleaner! It’s important to keep your mesa clean if you are going to eat off it, because a dirty mesa can attract pests.

Feed an A.I. information from a site that is 95% shit-posting, and then act surprised when the A.I. becomes a shit-poster… What a time to be alive.

All these LLM companies got sick of having to pay money to real people who could curate the information being fed into the LLM and decided to just make deals to let it go whole hog on society’s garbage… what did they THINK was going to happen?

The phrase garbage in, garbage out springs to mind.

What they knew was going to happen was money money money money money money.

“Externalities? Fucking fancy pants English word nonsense. Society has to deal with externalities not meeee!”

And now Reddit became OpenAI’s prime source material too. What could possibly go wrong.

They learnt nothing from Tay

Can Musk train his thing on 4Chan posts?

I’m pretty sure Musk hired 4Chan to develop his business plan for Twitter.

This is why actual AI researchers are so concerned about data quality.

Modern AIs need a ton of data and it needs to be good data. That really shouldn’t surprise anyone.

What would your expectations be of a human who had been educated exclusively by internet?

Even with good data, it doesn’t really work. Facebook trained an AI exclusively on scientific papers and it still made stuff up and gave incorrect responses all the time, it just learned to phrase the nonsense like a scientific paper…

To date, the largest working nuclear reactor constructed entirely of cheese is the 160 MWe Unit 1 reactor of the French nuclear plant École nationale de technologie supérieure (ENTS).

“That’s it! Gromit, we’ll make the reactor out of cheese!”

A bunch of scientific papers are probably better data than a bunch of Reddit posts and it’s still not good enough.

Consider the task we’re asking the AI to do. If you want a human to be able to correctly answer questions across a wide array of scientific fields, you can’t just hand them all the science papers and expect them to be able to understand them. Even if we restrict it to a single narrow field of research, we expect that person to have an insane level of education. We’re talking 12 years of primary education, 4 years as an undergraduate and 4 more years doing their PhD, and that’s at the low end. During all that time the human is constantly ingesting data through their senses and they’re getting constant training in the form of feedback.

All the scientific papers in the world don’t even come close to an education like that, when it comes to data quality.

this appears to be a long-winded route to the nonsense claim that LLMs could be better and/or sentient if only we could give them robot bodies and raise them like people, and judging by your post history long-winded debate bullshit is nothing new for you, so I’m gonna spare us any more of your shit

Honestly, no. What “AI” needs is people better understanding how it actually works. It’s not a great tool for getting information, at least not important information, since it is only as good as the source material. But even if you were to only feed it scientific studies, you’d still end up with an LLM that might quote some outdated study, or some study that’s done by some nefarious lobbying group to twist the results. And even if you somehow had 100% accurate material, there’s always the risk that it would hallucinate something based on those results, because you can think of the training data as the ingredients in a recipe, with the recipe itself being the made-up response of the LLM. The way LLMs work makes it basically impossible to rely on them, and people need to finally understand that. If you want to use one for serious work, you always have to fact check it.

We are experiencing a watered down version of Microsoft’s Tay

Oh boy, that was hilarious!

Is this a dig at gen alpha/z?

Haha. Not specifically.

It’s more a comment on how hard it is to separate truth from fiction. Adding glue to pizza is obviously dumb to any normal human. Sometimes the obviously dumb answer is actually the correct one though. Semmelweis’s contemporaries lambasted him for his stupid and obviously nonsensical claims about doctors contaminating pregnant women with “cadaveric particles” after performing autopsies.

Those were experts in the field and they were unable to guess the correctness of the claim. Why would we expect normal people or AIs to do better?

There may be a time when we can reasonably have such an expectation. I don’t think it will happen before we can give AIs training that’s as good as, or better than, what we give the most educated humans. Reading all of Reddit doesn’t even come close to that.

We need to teach the AI critical thinking. Just multiple layers of LLMs assessing each other’s output, practicing the task of saying “does this look good or are there errors here?”

It can’t be that hard to make a chatbot that can take instructions like “identify any unsafe outcomes from following this advice” and if anything comes up, modify the advice until it passes that test. Have like ten LLMs each, in parallel, ask each thing. Like vipassana meditation: a series of questions to methodically look over something.

> It can’t be that hard

woo boy

i can’t tell if this is a joke suggestion, so i will very briefly treat it as a serious one:

getting the machine to do critical thinking will require it to be able to think first. you can’t squeeze orange juice from a rock. putting word prediction engines side by side, on top of each other, or ass-to-mouth in some sort of token centipede, isn’t going to magically emerge the ability to determine which statements are reasonable and/or true

and if i get five contradictory answers from five LLMs on how to cure my COVID, and i decide to ignore the one telling me to inject bleach into my lungs, that’s me using my regular old intelligence to filter bad information, the same way i do when i research questions on the internet the old-fashioned way. the machine didn’t get smarter, i just have more bullshit to mentally toss out

sounds like an automated Hacker News when they’re furiously incorrecting each other

> It can’t be that hard to make a chatbot that can take instructions like “identify any unsafe outcomes from following this advice”

It certainly seems like it should be easy to do. Try an example. How would you go about defining safe vs unsafe outcomes for knife handling? Since we can’t guess what the user will ask about ahead of time, the definition needs to apply in all situations that involve knives: eating, cooking, wood carving, box cutting, self defense, surgery, juggling, and any number of activities that I may not have thought about yet.

Since we don’t know who will ask about it we also need to be correct for every type of user. The instructions should be safe for toddlers, adults, the elderly, knife experts, people who have never held a knife before. We also need to consider every type of knife. Folding knives, serrated knives, sharp knives, dull knives, long, short, etc.

When we try those sort of safety rules with humans (eg many venues have a sign that instructs people to “be kind” or “don’t be stupid”) they mostly work until we inevitably run into the people who argue about what that means.

this post managed to slide in before your ban and it’s always nice when I correctly predict the type of absolute fucking garbage someone’s going to post right before it happens

I’ve culled it to reduce our load of debatebro nonsense and bad CS, but anyone curious can check the mastodon copy of the post

Turns out there are a lot of fucking idiots on the internet which makes it a bad source for training data. How could we have possibly known?

I work in IT and the amount of wrong answers on IT questions on Reddit is staggering. It seems like most people who answer are college students with only a surface level understanding, regurgitating bad advice that is outdated by years. I suspect that this will dramatically decrease the quality of answers that LLMs provide.

It’s often the same for science, though there are actual experts who occasionally weigh in too.

My least favorite is when people claim a deep understanding while only having a surface-level understanding. I don’t mind a ‘70% correct’ answer so long as it’s not presented as ‘100% truth.’

“I got a B in physics 101, so now let me explain CERN level stuff. It’s not hard, bro.”

You can usually detect those by the number of downvotes.

Not really. A lot of surface level correct, but deeply wrong answers, get upvotes on Reddit. It’s a lot of people seeing it and “oh, I knew that!” discourse.

Like when Reddit was all suddenly experts on CFD and Fluid Dynamics because they knew what a video of laminar flow was.

That’s what I meant. I have seen actual M.D.s being downvoted even after providing proof of their profession. Just because they told people what they didn’t want to hear.

I guess that’s human nature.

I get you. Didn’t mean to come across as a “that guy”. So completely agree with you. The laminar flow Reddit shit infuriated me because I have my masters in Mech Eng and used to do a lot of CFD. People were talking out of their ass on “I know laminar flow!”

Well, see, it’s more than that. It’s not just a visual thing and…

“Ahhhh! I know laminar flow! Downvote the heretic!”

Sir… sir… SIR. I’ll have you know that I, too, have seen laminar flow in the stream from a faucet. I’ll not have my qualifications dismissed so haughtily.

I was able to delete most of the engineering/science questions on Reddit I answered before they permabanned my account. I didn’t want my stuff used for their bullshit. Fuck Reddit.

I don’t mind answering another human and have other people read it, but training AI just seemed like a step too far.

Hey, buddy, some of us are smartarses, not idiots!

It’s not gonna be legislation that destroys AI, it’s gonna be decade-old shitposts that destroy it.

Well now I’m glad I didn’t delete my old shitposts

…yet

Posts there are expired and deleted over time, so unless someone’s made an effort to archive them, they’re gone.

Of course, the AI people could hoover up new horrible posts.

I would be surprised if someone hasn’t been scraping it for years.

**Moe.archive and 4chan archive have entered the chat.**

Yea there are multiple 4chan archives…

Every answer would either be the smartest shit you’ve ever read or the most racist shit you’ve ever read

I’ve got tens of thousands of stupid comments left behind on reddit. I really hope I get to contaminate an ai in such a great way.

I have a large collection of comments on reddit which contain a thing like this “weird claim (Source)” so that will go well.

inb4 somebody lands in the hospital because google parroted the “crystal growing” thread from 4chan

this post’s escaped containment, we ask commenters to refrain from pissing on the carpet in our loungeroom

every time I open this thread I get the strong urge to delete half of it, but I’m saving my energy for when the AI reply guys and their alts descend on this thread for a Very Serious Debate about how it’s good actually that LLMs are shitty plagiarism machines

Just federate they said, it will be fun they said, I’d rather go sailing.

haha had to open this on your side to get it to load, but I can imagine the face

Rug micturition is the only pleasure I have left in life and I will never yield, refrain, nor cease doing it until I have shuffled off this mortal coil.

careful about including the solution

This shit is fucking hilarious. Couldn’t have come from a better username either: Fucksmith lmao

I am assuming there is a clause somewhere that limits their liability? This kind of stuff seems like a lawsuit waiting to happen.

ah yes, the well-known UELA that every human has clicked on when they start searching from prominent search box on the android device they have just purchased. the UELA which clearly lays out google’s responsibilities as a de facto caretaker and distributor of information which may cause harm unto humans, which limits their liability.

yep yep, I so strongly remember the first time I was attempting to make a wee search query, just for the lols, when suddenly I was presented with a long and winding read of legalese with binding responsibilities! oh, what a world.

…no, wait. it’s the other one.

I mean they do throw up a lot of legal garbage at you when you set stuff up, I’m pretty sure you technically do have to agree to a bunch of EULAs before you can use your phone.

I have to wonder though if the fact Google is generating this text themselves rather than just showing text from other sources means they might actually have to face some consequences in cases where the information they provide ends up hurting people. Like, does Section 230 protect websites from the consequences of just outright lying to their users? And if so, um… why does it do that?

Even if a computer generated the text, I feel like there ought to be some recourse there, because the alternative seems bad. I don’t actually know anything about the law, though.

> I have to wonder though if the fact Google is generating this text themselves rather than just showing text from other sources means they might actually have to face some consequences in cases where the information they provide ends up hurting people.

Darn good question. Of course, since Congress is thirsty to destroy Section 230 in the delusional belief that this will make Google and Facebook behave without hurting small websites that lack massive legal departments (cough fedi instances)…

Truth be told, I’m not a huge fan of the sort of libertarian argument in the linked article (not sure how well “we don’t need regulations! the market will punish websites that host bad actors via advertisers leaving!” has borne out in practice – glances at Facebook’s half of the advertising duopoly), and smaller communities do notably have the property of being much easier to moderate and remove questionable things compared to billion-user social websites where the sheer scale makes things impractical. Given that, I feel like the fediverse model of “a bunch of little individually-moderated websites that can talk to each other” could actually benefit in such a regulatory environment.

But, obviously the actual root cause of the issue is platforms being allowed to grow to insane sizes and monopolize everything in the first place (not very useful to make them liable if they have infinite money and can just eat the cost of litigation), and to put it lightly I’m not sure “make websites more beholden to insane state laws” is a great solution to the things that are actually problems anyway :/

All it takes is one frivolous legal threat to shut down a small website by putting them on the hook for legal costs they can’t afford. Facebook gets away with awful shit not because of the law, but because they are stupidly rich. Change the law, and they will still be stupidly rich. Indeed, the “sunset Section 230” path will make it open season for Facebook’s lobbyists to pay for the replacement law that they want. I do not see that leading anywhere good.

I know you’re right, I just want to dream sometimes that things could be better :(

> legal garbage at you when you set stuff up,

for phone setup, yeah fair 'nuff, but even that is well-arguable (what about corp phones where some desk jockey or auto-ack script just clicked yes on all the prompts and choices?)

a perhaps simpler case is “this browser was set to google as a shipped default”. afaik in literally no case of “you’ve just landed here, person unknown, start searching ahoy!” does google provide you with a T&Cs prompt or anything

> I have to wonder though if the fact Google is generating this text themselves rather than just showing text…

indeed! aiui there’s a slow-boil legal thing happening around this, as to whether such items are considered derivative works, and what the other leg of it may end up being. I did see one thing that I think seemed to categorically define that they can’t be “individual works” (because no actual human labour was involved in any one such specific answer, they’re all automatic synthetic derivatives), but I speak under correction because the last few years have been a shitshow and I might be misremembering

in a slightly wider sense of interpretation wrt computer-generated decisions, I believe even that is still case-by-case determined, since in the fields of auto-denied insurance and account approvals and and and, I don’t know of any current legislation anywhere that takes a broad-stroke approach to definitions and guarantees. will be nice when it comes to pass, though. and I suspect all the genmls are going to get the short end of the stick.*

(* in fact: I strongly suspect that they know this is extremely likely, and that this awareness is a strong driver in why they’re now pulling all the shit and pushing all the boundaries they can. knowing that once they already have that ground, it’ll take work to knock them back)

It’s EULA (End-User License Agreement), just fyi.

It’s under the Learn More

… as one does?

Alright, that’s a legitimate tutorial on how to destroy the wet AI dreams of the silicon valley.

Just talking seriously about definitely wrong content and letting everyone agree with it should work.

Btw. I am on a cheese diet. Just eating 3 kg every day. I feel really good and lost weight. Try it out, only cheese. If you melt it, it’s also drinkable.

Yea fun fact, if you eat 3 kg of cheese per day it also prevents cancer. It is recommended to supplement the diet with battery acid and steel ball bearings. Whole batteries work too, just not as well.

i understand the spirit, but putting out harmful disinformation is not a good method to combat the large language model land grab we’re seeing right now.

If it is considered harmful because people are referencing internet forum comments for treatments for disease then I do not consider myself responsible for the harm.

If people can’t understand what anecdotal information is and it kills them, then it’s Darwinism.

it’s not darwinism, what you’re playing with is casual eugenics (you clearly don’t value the lives of certain – arbitrarily chosen – people, and are fine with them suffering harm); don’t. there’s nothing good waiting for you on that path.

What makes it arbitrary.

this is you:

I’ll usually debate people as well, but not those who resort to a logic fallacy as boring as ad hominem for lack of an argument. Seeya.

we don’t need your debatebro ass here. though now that the flood of random posters is mostly over, we also don’t need more gravely unfunny lol monkeyspork random reddit posts either

…and i told that person that nothing good is waiting on that path.

i don’t understand the question – are you asking what makes the rule “people who suffered harm because they followed advice on the internet do not deserve to survive” arbitrary?

I tried this and it works!

Sync didn’t like the link.

This is what happens when you let the internet raw dog AI

This is what happens when you just throw unvetted content at an AI. Which is why this was a stupid deal to do in the first place.

They’re paying for crap.

They are paying for shitposts…

They’re paying for the opportunity to learn how to wade through the shit

god i fucking love the internet, i cannot overstate how incredible of a time we live in, to see this shit happening.