With forewarning about a huge influx of users, you know Lemmy.ml will go down. Even if people go to https://join-lemmy.org/instances and disperse among the great instances there, the servers will go down.

Ruqqus had this issue too. Every time there was a mass exodus from Reddit, Ruqqus would go down, and hardly reap the rewards.

Even if it’s not sustainable, just for one month, I’d like to see Lemmy.ml drastically boost their server power. If we can raise money as a community, what kind of server could we get for 100$? 500$? 1,000$?

It’s a single server, Michael. What could it cost, $10?

I’d donate 500$ for one month of high cores/RAM to brace the hug

Nice :) https://opencollective.com/lemmy

Already donated some, but admins said they’re not gonna upgrade servers for it

The site currently runs on the biggest VPS which is available on OVH. Upgrading further would probably require migrating to a dedicated server, which would mean some downtime. I’m not sure if it’s worth the trouble; the site will go down sooner or later anyway if millions of Reddit users try to join.

8 vCore 32 GB RAM😬

2 follow-ups:

- Can we replace Lemmy.ml with Join-lemmy.org when Lemmy.ml is overloaded/down?

- Does LemmyNet have any plans on being Kubernetes (or similar horizontal scaling techniques) compatible?

Maybe some dns fail-over for lemmy.ml to point to join-lemmy.org might be cool indeed 🤔

Yeah, was thinking of a DNS based solution as well. Probably the easiest and most effective way to do it?

We need Self hosted team and team networking to represent. Would be amazing to see some community support in scaling Lemmy up.

Can we replace Lemmy.ml with Join-lemmy.org when Lemmy.ml is overloaded/down?

I don’t think so. When the site is overloaded, clients can’t reach it at all.

Does LemmyNet have any plans on being Kubernetes (or similar horizontal scaling techniques) compatible?

It should be compatible if someone sets it up.

You could configure something like a Cloudflare worker to throw up a page directing users elsewhere whenever healthchecks failed.

Then Cloudflare would be able to spy on all the traffic, so that’s not an option.

spy on all the traffic

That’s…not how things work. Everyone has their philosophical opinions so I won’t attempt to argue the point, but if you want to handle scale and distribution, you’re going to have to start thinking differently, otherwise you’re going to fail when load starts to really increase.

You could run an interstitial proxy yourself with a little health checking. The server itself doesn’t die, just the webapp/db. nginx could be stuck on there (if it’s not already there) with a temp redirect if the site is timing out.
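A minimal sketch of that health-checking idea, not an nginx config and not anything Lemmy ships. The class name `HealthGate`, the `probe` callback and the threshold of three consecutive failures are all assumptions made up for illustration; the fallback URL is the instance list mentioned at the top of the thread.

```python
from typing import Callable, Tuple


class HealthGate:
    """Decide between proxying to the app and redirecting elsewhere."""

    def __init__(self, probe: Callable[[], bool], fallback_url: str,
                 max_failures: int = 3) -> None:
        self.probe = probe                # hypothetical check: True if the app answered in time
        self.fallback_url = fallback_url  # where to send users while the app is down
        self.max_failures = max_failures  # consecutive failures before redirecting
        self.failures = 0

    def check(self) -> None:
        """Run one health check and update the consecutive-failure counter."""
        try:
            ok = self.probe()
        except Exception:
            ok = False  # a timeout or refused connection counts as a failure
        self.failures = 0 if ok else self.failures + 1

    def response(self) -> Tuple[int, str]:
        """Return (status, target): a temporary redirect when unhealthy, else pass through."""
        if self.failures >= self.max_failures:
            return 302, self.fallback_url
        return 200, "upstream"
```

Requiring several failures in a row keeps one slow request from bouncing everyone to the fallback, and a single successful check switches traffic back.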

How about https://deflect.ca/ they could still spy but probably less bad?

I’m sure you know this, but getting progressively larger servers is not the only way, why not scale horizontally?

I say this as someone with next to no idea how Lemmy works.

It’s better to optimize the code so that all instances benefit.

Is it possible to make Lemmy (the system as a whole) able to be compatible with horizontally scaling instances? I don’t see why an instance has to be confined to one server, and this would allow for very large instances that can scale to meet demand.

Edit: just seen your other comment https://lemmy.ml/comment/453391

It should be easy once websocket is removed. Sharded postgres and multiple instances of frontend/backend. Though I don’t have any experience with this myself.

I think that is unavoidable. Look at the most popular subreddits: they can get something like 80 million upvotes and 66K comments per day. Do you think a single server can handle that?

Splitting communities just so that it will be easier technically is not good UX.

@nutomic @Lobstronomosity In one of the comments I thought I saw that the biggest CPU load was due to image resizing.

I think it might be easier to split the image resizer off to its own worker that can run independently on one (or more) external instances. Example: client uses API to get a temporary access token for upload, client uploads to one of many image resizers instead of the main API, image resizer sends output back to the main API.

Then your main instance never sees the original image
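The temporary upload token in that flow could look roughly like this. It is only a sketch under assumptions: the shared secret, the `user_id:expiry:signature` token layout and both function names are invented here, and nothing in Lemmy or Pictrs is confirmed to work this way.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"shared-between-api-and-resizers"  # hypothetical shared secret


def issue_token(user_id: str, ttl: int = 300, now: Optional[float] = None) -> str:
    """Main API: sign 'user_id:expiry' so a resizer can verify it without a DB lookup."""
    expiry = int((time.time() if now is None else now) + ttl)
    payload = f"{user_id}:{expiry}"
    sig = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    return f"{payload}:{sig}"


def verify_token(token: str, now: Optional[float] = None) -> bool:
    """Resizer: accept the upload only if the signature matches and it has not expired."""
    try:
        user_id, expiry, sig = token.rsplit(":", 2)
        expires_at = int(expiry)
    except ValueError:
        return False  # malformed token
    expected = hmac.new(SECRET, f"{user_id}:{expiry}".encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig.encode(), expected.encode()):
        return False  # payload or signature was tampered with
    return (time.time() if now is None else now) < expires_at
```

Because the resizer only needs the secret to check a token, it never has to call back into the main API or the database before accepting an upload.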

There is already a docker image so that should not be too hard. I’d be happy to set something up, but (as others have said) the DB will hit a bottleneck relatively quickly.

I like the idea of splitting off the image processing.

Image processing isn’t causing any noticeable CPU load.

I saw someone say it was, obviously I have no access to data.

There will either be an hour of downtime to migrate and grow or days of downtime to fizzle.

I love that there’s an influx of volunteers, including SQL experts, to mitigate scaling issues for the entire fediverse but those improvements won’t be ready in time. Things are overloading already and there’s less than a week before things increase 1,000-fold, maybe more.

What’s the current bottleneck?

SQL. We desperately need SQL experts. It’s been just me for years, and my SQL skills are pretty terrible.

Put the whole DB in RAM :-)

Makes me remember optimization, lots of EXPLAIN and JOIN pain, on my old MySQL multiplayer game server lol. A shame I’m not an expert …

There are some SQL database optimisations being discussed right now and apparently the picture resizing on upload can be quite CPU heavy.

SQL dev here. Happy to help if you can point me in the direction of said conversation. My expertise is more in ETL processes for building DWs and migrating systems, but maybe I can help?

this seems to be the relevant issue: https://github.com/LemmyNet/lemmy/issues/2877

I’ve been helping on the SQL github issue. And I think the biggest performance boost would be to separate the application and postgresql onto different servers. Maybe even use a hosted postgresql temporarily, so you can scale the db at the press of a button. The app itself appears to be negligible in terms of requirements (except the picture resizing - which can also be offloaded).

But running a dedicated db on a dedicated server, as close to the bare metal as possible, gives by far the best performance. And you can scale it up for more connections. Our production database at my data analytics startup runs a postgresql instance on an i9 server with 16 cores, 128GB RAM, and a fast SSD. We have 50 connections set up, and then run pgbouncer to allow up to 500 connections to share those 50. It seamlessly runs heavy reporting and dashboards for more than 500 business customers with billions of rows of data. And it costs us less than US$200pm at https://www.tailormadeservers.com/.
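To show what "many clients sharing a few connections" means, here is a toy simulation. It is not pgbouncer and does not talk to postgresql; the function name `run_clients`, the fake integer "connections" and the 1 ms "query" are assumptions for illustration only.

```python
import queue
import threading
import time
from typing import Tuple


def run_clients(n_clients: int, pool_size: int) -> Tuple[int, int]:
    """Let n_clients share pool_size connections; return (completed, peak_in_use)."""
    pool: queue.Queue = queue.Queue()
    for conn_id in range(pool_size):
        pool.put(conn_id)  # stand-ins for real database connections

    lock = threading.Lock()
    stats = {"in_use": 0, "peak": 0, "completed": 0}

    def client() -> None:
        conn = pool.get()  # blocks until some connection is free
        try:
            with lock:
                stats["in_use"] += 1
                stats["peak"] = max(stats["peak"], stats["in_use"])
            time.sleep(0.001)  # pretend to run a short query
            with lock:
                stats["in_use"] -= 1
                stats["completed"] += 1
        finally:
            pool.put(conn)  # hand the connection back for the next client

    threads = [threading.Thread(target=client) for _ in range(n_clients)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stats["completed"], stats["peak"]
```

Calling `run_clients(500, 50)` mirrors the numbers above: every client finishes, but the database side never sees more connections in use than the pool holds.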

apparently the picture resizing on upload can be quite CPU heavy

This suggestion probably won’t help with hosted VPS, but lib nvJPEG pushes crazy theoretical numbers for image resizing.

Probably not, but it does mention a more general CUDA based solution that might be interesting to add to Pictrs. I could for example move my Pictrs instance onto a server that does have an older Nvidia GPU to accelerate stuff (to use for Libretranslate and some other less demanding ML stuff).

Edit: Ok looks like the resizing is anyways only supported on Pictrs 0.4.x which most Lemmy instances are not using yet. However this seems to use regular ImageMagick in the background, so chances are quite high that it can be made to work with OpenCL: https://imagemagick.org/script/opencl.php

And maybe the bandwidth. Serving thousands and thousands of users needs at minimum 1 Gbps.

It’s mostly text, so bandwidth shouldn’t be a problem.

Is it running in a single docker container or is it spread out across multiple containers? Maybe with docker-machine or kubernetes with horizontal scaling, it could absorb users without issue - well, except maybe cost. OVH has managed kubernetes.

So reading this correctly, it’s currently a hosting bill of 30 Euro a month?

No, that’s the 8 GB memory option… if it’s the biggest, it should be around 112 €.

It’s the one for 30 euros; I’m not seeing any VPS for 112. Maybe that’s a different type of VPS?

in vservers, it depends on the memory … and storage option for the one starting at 30…

It currently has 8gb and only uses 6gb or so. CPU is the only limitation.

It does not sound like OVH’s vServers offer dedicated cores, and shared cores commonly become a bottleneck quickly with VPS offerings across hosters. For example, with the initial Mastodon hypes, I had to learn that shared hardware lesson the hard way. For the price you are currently paying, maybe something like a used dedicated server (or one of the fancy AMD ones) at Hetzner is of interest: https://www.hetzner.com/sb

Hetzner is great but they are very strict about piracy, so it’s not an option for lemmy.ml. For now the load has gone down so I will leave it like this, but a dedicated OVH server might be an option if load increases again.

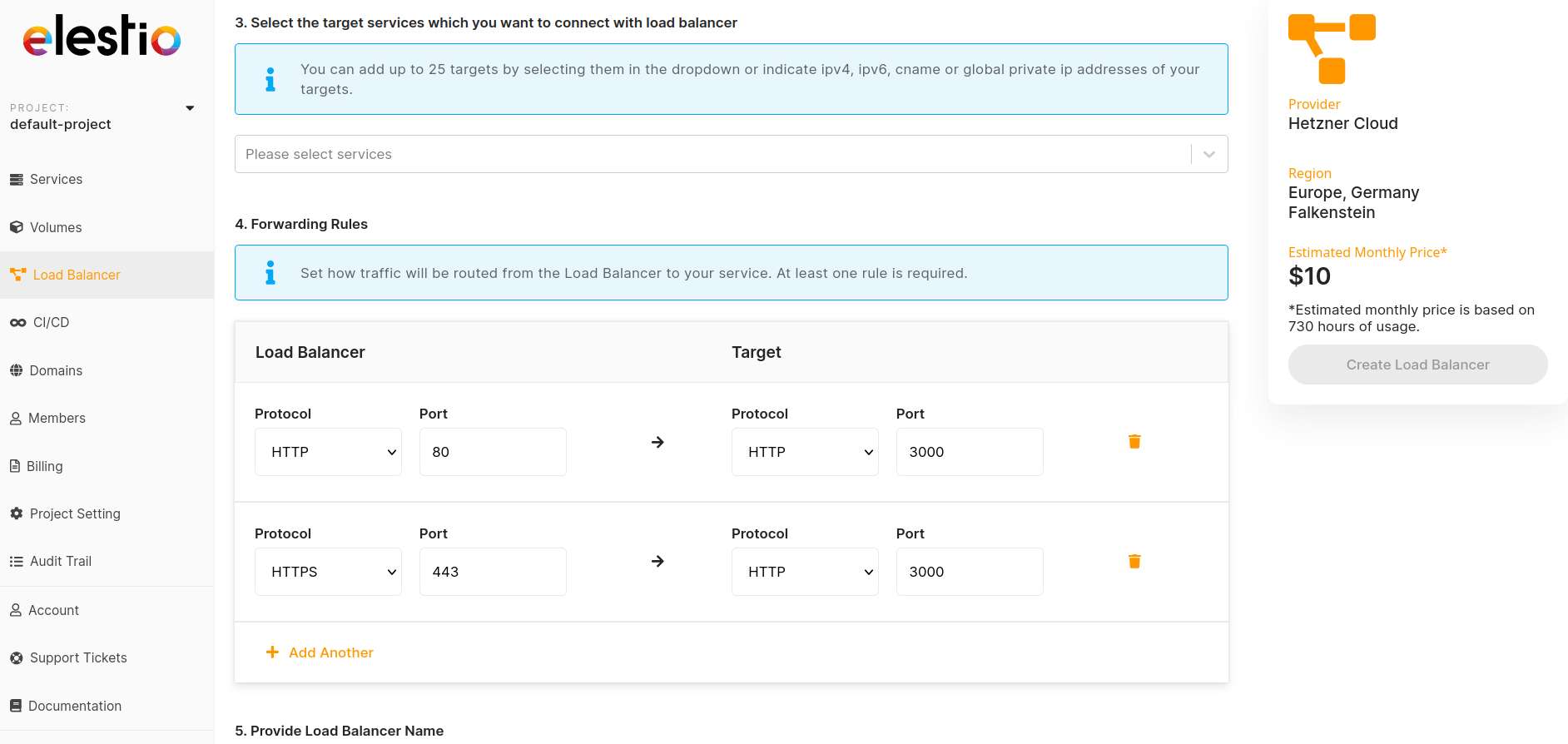

I’m relatively new to https://elest.io/pricing but it seems an easy way to scale stuff up (and down again) Dockerised, just upgrade the plan to the next tier when needed. Pay by the hour. Downgrade it again later.

There’s also a bunch of load balancer options I haven’t even begun to explore yet.

If you select Hetzner it’s EU based & powered by green energy

Yeah, the biggest seems to be €110 per month if they don’t get a discount for committing to a longer contract duration.

Really ?

Do you have the frontend and DB serving in the same VPS? If so it would be a great time to split them. Likewise if your DB is running in a VPS, you’re likely suffering from significant steal from the hypervisor so you would benefit from switching to a dedicated box. My API calls saw a speedup of 10x just from switching from a VPS DB to a Dedicated Box DB.

I just checked OVH VPS offers and they’re shit! Even at 70 Eur dedicated on hetzner, you would gain more than double those resources without steal. I would recommend switching your DB ASAP for immediate massive gains.

If you’re wondering why you should listen to me, I built and run https://aihorde.net and am handling about 5K concurrent connections currently.

Hetzner is very strict about piracy so that’s not an option. And it’s almost the weekend now, so I won’t have time for a migration. Anyway, there are plenty of other instances in case lemmy.ml goes down.

Edit: I also wouldn’t know which size of dedicated server to choose. No matter what I pick, it will get overloaded again after a week or two.

Even if you choose Hetzner, it won’t even know it has anything to do with piracy because it will be just hosting the DB, and nobody will know where your DB is. That fear is overblown.

Likewise believe me a dedicated server is night and day from a VPS.

I’m a Reddit refugee (new number who dis?)

I’m in the process of closing on a house/moving so I don’t have a ton of extra money or time laying around, but I work in tech as a junior Linux admin with some experience with some big data tech (HDFS, some Spark, Python, etc).

How can I help?

Good question. Seeing as your set of skills doesn’t quite align with Lemmy’s core components (Rust backend and Inferno frontend), your best bet would probably be on helping new people settle in, improving documentation, translations, discussing new ideas (like for onboarding), etc.

Any form of help is highly appreciated!

I only know enough code to break things, but I wouldn’t mind working on some documentation - I’ll go read what Lemmy needs; thanks for reminding me that anyone can chip in.

Thank you for considering helping out :D

- RoundSparrow ( @RoundSparrow@lemmy.ml )

Based on looking at the code and the relatively small size of the data, I think there may be fundamental scaling issues with the site architecture. Software development may be far more critical than hardware at this point.

What are you seeing in the code that makes it hard to scale horizontally? I’ve never looked at Lemmy before, but I’ve done the steps of (monolithic app) -> docker -> make app stateless -> Kubernetes before and as a user, I don’t necessarily see the complexity (not saying it’s not there, but wondering what specifically in the site architecture prevents this transition)

- RoundSparrow ( @RoundSparrow@lemmy.ml )

Right now it looks to me like Lemmy is built all around live real-time data queries of the SQL database. This may work when there are 100 postings a day and an active posting gets 80 comments… but it likely doesn’t scale very well. You tend to have to evolve to a queue system where things like comments and votes are merged into the main database in more of a batch process (Reddit does this, you will see on their status page that comments and votes have different uptime tracking than the main website).
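A rough sketch of what such a batch step could look like for votes. Everything here is an assumption for illustration: `VoteBuffer`, the `write_batch` callback (imagine it doing one bulk update) and the threshold of 1000 queued votes are made up, and this is not how Lemmy or Reddit actually implement it.

```python
from collections import Counter
from typing import Callable, Dict


class VoteBuffer:
    """Collect votes in memory and write them to the database in batches."""

    def __init__(self, write_batch: Callable[[Dict[int, int]], None],
                 max_pending: int = 1000) -> None:
        self.write_batch = write_batch  # hypothetical function doing one bulk write
        self.max_pending = max_pending  # flush once this many votes are queued
        self.pending: Counter = Counter()
        self.count = 0

    def vote(self, post_id: int, delta: int) -> None:
        """Queue a vote (+1 or -1) instead of touching the database right away."""
        self.pending[post_id] += delta
        self.count += 1
        if self.count >= self.max_pending:
            self.flush()

    def flush(self) -> None:
        """Merge all queued votes into one net change per post, then reset the buffer."""
        if self.count:
            self.write_batch(dict(self.pending))
            self.pending.clear()
            self.count = 0
```

A real setup would probably also call `flush()` on a timer so a quiet post does not wait forever, and would need the queue to survive a crash. The point is only that a thousand votes become one write.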

On the output side, it seems ideal to have all data live and up to the very instant, but it can fall over under load surges (which may be a popular topic, not just an influx from the decline of Twitter or Reddit). To scale, you tend to have to make some compromises and reuse output. Some kind of intermediate layer such as every 10 seconds only regenerate the output page if there has been a new write (vote or comment change).
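The "regenerate at most every 10 seconds, and only if something was written" layer might be sketched like this. `PageCache`, `render` and `mark_written` are hypothetical names, and the injectable `clock` is there only so the behaviour can be checked without waiting.

```python
import time
from typing import Callable, Optional


class PageCache:
    """Serve a cached page; re-render at most once per interval, and only after a write."""

    def __init__(self, render: Callable[[], str], interval: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.render = render      # hypothetical function that runs the expensive queries
        self.interval = interval  # minimum seconds between re-renders
        self.clock = clock
        self.page: Optional[str] = None
        self.rendered_at = 0.0
        self.dirty = False

    def mark_written(self) -> None:
        """Call on every vote or comment change."""
        self.dirty = True

    def get(self) -> str:
        """Return the cached page, re-rendering only if it is dirty and old enough."""
        now = self.clock()
        stale = self.dirty and now - self.rendered_at >= self.interval
        if self.page is None or stale:
            self.page = self.render()
            self.rendered_at = now
            self.dirty = False
        return self.page
```

With this in front of a hot thread, the number of expensive renders is bounded by the interval rather than by the number of readers, at the cost of readers seeing data up to ten seconds old.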

don’t necessarily see the complexity (not saying it’s not there

It’s the lack of complexity that’s kind of the problem. Doing direct SQL queries gets you the latest data, but it becomes a big bottleneck. Again, what might have seemed to work fine when there were only 5000 postings and 100,000 total comments in the database can start to seriously fall over when you have reached the point of accumulating 1000 times that.

Out of curiosity how would https://kbin.social/ source: https://codeberg.org/Kbin/kbin-core stand up to this kind of analysis? Is it better placed to scale?

The advantage kbin has is that it is built on a pretty well known and tested PHP Symfony stack. In theory Lemmy is faster due to being built in Rust, but it is much more home-grown and not as optimized yet.

That said, kbin is also still a pretty new project that hasn’t seen much actual load, so likely some dragons linger in its codebase as well.

I think it’s probably undesirable to end up with big instances. I think the best situation might be one instance that’s designed to scale. This could be lemmy.ml or another one. It can absorb these waves of new users.

However it’s also designed to expire accounts after six months.

After three months it sends users an email explaining it’s time to choose a server, it nags them to do so for a further three months. After that their ability to post is removed. They remain able to migrate their account to a new server.

After 12 months of not logging in the account is purged.
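Written out as a rule, the proposal might look like the sketch below. The function name, the state labels and the day counts (90, 180 and 365 days standing in for three, six and twelve months) are assumptions chosen for illustration.

```python
def account_state(days_since_signup: int, days_since_login: int) -> str:
    """Map an account's age and inactivity to the stage in the proposal above."""
    if days_since_login >= 365:
        return "purged"     # 12 months without logging in
    if days_since_signup >= 180:
        return "read_only"  # posting removed, migration still allowed
    if days_since_signup >= 90:
        return "nagging"    # reminder emails to pick a permanent server
    return "active"
```

For example, an account that signed up 100 days ago and logged in yesterday would be in the "nagging" stage under these assumed thresholds.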

Thought on this a bit more, and I’m thinking encouraging users not to silo (and make it easy to discover instances and new communities) will probably be the best bet for scaling the network long-term.

“Smart” rate limiting of new accounts and activity per-instance might help with this organically. If a user is told that the instance they’re posting on is too active to create a new post, or too active to accept new users, and then is given alternatives, they might not outright leave.
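One simple way to picture this is a sliding-window counter per instance that hands back a list of alternatives once the limit is hit. This is only a sketch: `InstanceLimiter`, its parameters and the example hostnames in the usage note are made up, and Lemmy's real rate limiting is not confirmed to work this way.

```python
import time
from collections import deque
from typing import Callable, Deque, List, Tuple


class InstanceLimiter:
    """Sliding-window limit on new posts or signups for one instance."""

    def __init__(self, limit: int, window: float, alternatives: List[str],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit                # actions allowed per window
        self.window = window              # window length in seconds
        self.alternatives = alternatives  # other instances to suggest when full
        self.clock = clock
        self.events: Deque[float] = deque()

    def try_action(self) -> Tuple[bool, List[str]]:
        """Return (allowed, suggestions); suggestions are non-empty only when refused."""
        now = self.clock()
        # Forget actions that have fallen out of the window.
        while self.events and now - self.events[0] >= self.window:
            self.events.popleft()
        if len(self.events) >= self.limit:
            return False, self.alternatives
        self.events.append(now)
        return True, []
```

For instance, `InstanceLimiter(100, 3600.0, ["instance-a.example", "instance-b.example"])` would allow 100 signups an hour and point everyone after that to the two placeholder instances instead of showing a bare error.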

That might work, is there some third party email app that could capture their email and let them know when registrations are open again? I know of some corporate/not privacy respecting ones such as https://kickofflabs.com/campaign-types/waitlist/ but presumably there’s a way to do that with some on-site tools?

- RoundSparrow ( @RoundSparrow@lemmy.ml )

I don’t have any experience with that specific app, so I don’t currently know.

Do you know of any resources about this, and/or how to implement it?

I’m also willing to donate to other instances too - Beehaw, Sopuli, Lemmygrad, Lemmyone - Anything so we can have better shock absorption. If you run one of those instances, please reply and let us know how much you think you need

At the moment, I run lemmy.world using the funding of mastodon.world. If Lemmy.world might grow and need a dedicated server, I’ll try to raise funds for it separately (or create a larger .world fundraiser as I have other instances as well)

What if a bunch of groups from the Fediverse hosted a huge fundraiser and distributed the funds to where they were needed? Maybe even kept a bank of funds for when large, temporary influxes of funding are needed.

That is a very good idea, you may want to send the idea to the dev.

What kind of server and which specs is lemmy.world running on? I’m planning on setting up my own instance for a small community, but I have no idea what to brace for.

Currently a 2 cpu 4GB VPS at Hetzner, costing 5 EUR per month. With a storage volume of also 5 per month. I am monitoring this and will scale when needed. For mastodon.world we scaled it to a dedicated server with 32 cores and 256GB so we can go a long way.

Can you provide any info about the number of pageviews/month or pageviews/hr that setup can support for lemmy?

No, I have no clue about that yet. I’ll monitor how Lemmy behaves and try to scale. Maybe after a while I can say something about it.

Thanks, appreciate the data point.

This is why I also subscribe to the communities on mastodon just in case

can you interact with mastodon on here? sorry if it’s a stupid question, i am new to the whole fediverse thing.

Yes you can :) There are no stupid questions; we’re not accustomed to these kinds of structures for social networks, so it’s very normal to have them. I can suggest a video like this to understand better how federation works

Can you post a video or written guide that shows me [a] how to actually see lemmy content inside mastodon and [b] how to see mastodon content inside lemmy?

Explainer videos are good, but I want to see screenshots of the UI and step-by-step instructions.

+1

Speaking about communicating with Lemmy from Mastodon, how do you know how Lemmy and Mastodon use authentication for creating an account? Edit: the more I am learning, the more my question above might not make sense.

Currently, I believe Mastodon/Misskey/Calckey/Akkoma/Friendica users can post and reply to Lemmy groups/communities. There doesn’t seem to be a way to follow them from Lemmy, though.

I think you might be able to follow Friendica groups, though.

And kbin and Lemmy have a lot of interoperability.

@top

Currently replying from my Mastodon account

@finickydesert@lemmy.ml

But is it possible to use the Lemmy UI with a Mastodon account? I can subscribe to Lemmy communities from within Mastodon, but it’s a very different user experience. Everything is just sorted by date, comments are not well threaded, etc.

Do I just need an account on a lemmy instance if I want a more reddit-like experience for that content?

I’m in the process of setting up a raspberry pi to host an instance, but the documentation is not super helpful. I’ll slog through it and issue a PR once complete so others may not have the same issues.

what kind of server could we get for 100$? 500$? 1,000$?

And how long? Days, Months…?