This is something that keeps me worried at night. Unlike other historical artefacts like pottery, vellum writing, or stone tablets, information on the Internet can just blink into nonexistence when the server hosting it goes offline. This makes it difficult for future anthropologists who want to study our history and document the different Internet epochs. For my part, I always try to send any news article I see to an archival site (like archive.ph) to help collectively preserve our present so it can still be seen by others in the future.

This is a very good point and one that is not discussed enough. Archive.org is doing amazing work, but there isn’t nearly enough of that, and they have very limited resources.

The whole internet is extremely ephemeral, more than people realize, and it’s concerning in my opinion. Funnily enough, I actually think that federation/decentralization might be the solution. A distributed system to back up the internet, one that anyone can contribute storage and bandwidth to, might be the only sustainable solution. I wonder if anyone has thought about it already.

I’d argue that decentralizing can help or hurt, depending on how it’s handled. If most sites are caching/backing up data that’s found elsewhere, that’s good for both resilience and preservation, but if the data in question is centralized on its home server, then instead of backing up one site we’re stuck backing up a thousand, not to mention the potential issues with discovery.

@strainedl0ve There is always https://ipfs.tech

This is why stuff like the Internet Archive exists: to try to preserve this content. The problem is that governments are trying to shut down the Internet Archive…

Source?

IA blog. There’s an ongoing court case. What happened is that IA has a digital book lending service. Typically they restrict lending to one user per physical book, which is the norm for digital book lending. However, during the pandemic (from March to June 2020), IA ran a “crisis library” event, the National Emergency Library, in which they allowed an unlimited number of people to borrow a book despite only having one or two copies. Publishers who own the copyright on those books then pursued a copyright infringement case against IA, which has now put the entire library in jeopardy.

Theoretically, this case should only affect the digital book lending side of their library, but depending on how it goes, it may end up shutting down their service and library as a whole. There have been a lot of efforts by companies and governments to shut down IA, so they’ve always been very cautious about their operations.

IA’s big legal issues stem from their novel ‘web archive’ and their digital book lending. They’ve also hosted ROMs of old software/games that may still fall under copyright. Philosophically, IMO IA did nothing wrong. However, their crisis library event did violate copyright law, which kinda put them under the microscope.

Theoretically the web archive service and general digital archives of old public domain content should be safe. But we’ll have to see how things go.

This is an annoying thing that happened. I don’t like that copyright works this way, but fuck man, IA had to know that what they were doing wasn’t even remotely in the grey area. It was a dumb move on their part.

Oh wow. That’s concerning, at minimum. Thank you.

Probably referencing this lawsuit that the internet archive lost recently, related to the online library they launched during the pandemic.

oh did the court stuff pass already? I haven’t kept up.

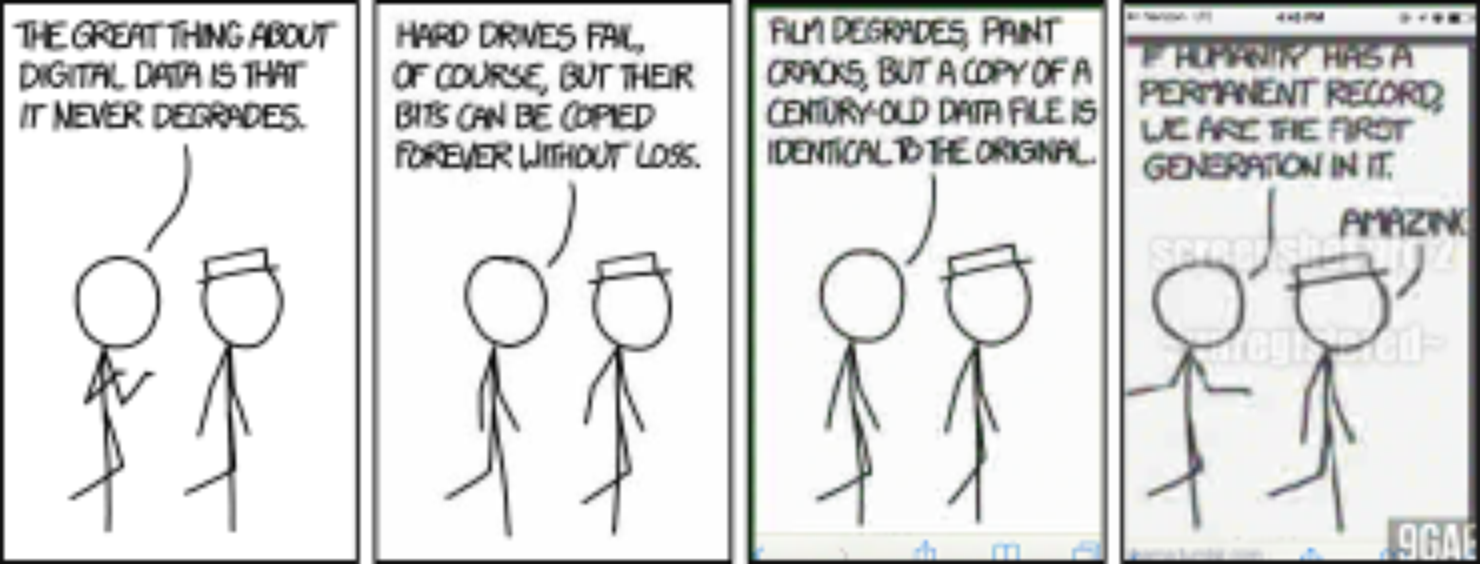

Yeah, it’s funny how I always got warned that “the internet is forever” when it comes to being careful about what you post on social media, which isn’t bad advice and is kinda true, but also really kinda not true. So many things I’ve wanted to find on the internet that I experienced like 5-15 years ago are just gone without a trace.

Things you want to disappear will last forever, but things you want to keep will vanish.

The internet can be forever. If you mess up publicly enough, it will be forever (e.g. the aerial picture of Barbra Streisand’s villa).

It should be revised to “the Internet can be forever”. There’s no control over what persists and what doesn’t, but some things really do get copied everywhere and live on in infamy.

I worry about this too. I’ve always said and thought that I feel more like a citizen of the Internet than of my country, state, or town, so its history is important to me.

Yeah and unless someone has the exact knowledge of what hard drive to look for in a server rack somewhere, tracing an individual site’s contents that went 404 is practically impossible.

I wonder, though, if cloud applications would be more robust than individual websites, since they tend to be managed by larger organizations (AWS, Azure, etc.).

Maybe we need a Svalbard Seed Vault extension just to house gigantic redundant RAID arrays. 😄

We’re actually well beyond RAID arrays. Look up Ceph. It’s both super complicated and kind of simple to grow to really large storage amounts with LOTS of redundancy. It’s trickier for global-scale redundancy; I think you’d need multiple clusters using something else to sync them.

I also always come back to some of the stuff Freenet used to do in older versions, where every client also contributed disk space that was opaque to them but kept a copy of whatever you looked at and whatever you relayed for others. The more people looked at a piece of content, the more copies ended up in the system, and it would only lose data if no one was interested in it for some period of time.
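A toy model of that popularity-driven caching, to make the “more viewers, more copies” effect concrete. This is a hypothetical sketch, not Freenet’s actual datastore: it assumes each node holds a fixed number of items and evicts whatever was least recently requested (plain LRU), and the `NodeStore` and `copies_in_network` names are made up for illustration.

```python
from __future__ import annotations

from collections import OrderedDict


class NodeStore:
    """One node's opaque datastore in a toy Freenet-like network (hypothetical)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.items: OrderedDict[str, bytes] = OrderedDict()

    def touch(self, key: str, data: bytes) -> None:
        """Cache anything this node fetches or relays, marking it most recent."""
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = data
        if len(self.items) > self.capacity:
            # Evict whatever has gone unrequested the longest (LRU).
            self.items.popitem(last=False)

    def has(self, key: str) -> bool:
        return key in self.items


def copies_in_network(nodes: list[NodeStore], key: str) -> int:
    """Count how many nodes still hold a copy of `key`."""
    return sum(node.has(key) for node in nodes)


# Ten small nodes. Everyone fetches the popular page; only node 0 fetches the obscure one.
nodes = [NodeStore(capacity=2) for _ in range(10)]
for node in nodes:
    node.touch("popular", b"...")
nodes[0].touch("obscure", b"...")

# Later traffic: each node fetches something new and re-requests the popular page.
for i, node in enumerate(nodes):
    node.touch(f"new-{i}", b"...")
    node.touch("popular", b"...")

print(copies_in_network(nodes, "popular"), copies_in_network(nodes, "obscure"))  # prints 10 0
```

The page everyone keeps asking for survives on every node, while the page only one node fetched once gets pushed out as soon as newer traffic arrives.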

This isn’t directly related to your comment, but you seem so smart, and I’ve got to say that’s definitely one thing I’m enjoying on this website over Reddit! :-)

Thanks! I don’t consider myself brilliant or anything, but I appreciate the compliment! The thing I like most is that everyone is so friendly around here, yourself included ☺️

Remember a few years ago when MySpace did a faceplant during a server migration and lost roughly twelve years’ worth of uploaded music (everything from 2003 to 2015)? It was one of the single largest losses in Internet history, and it’s just… not talked about at all anymore.

Things seem to be forgotten as quickly as they were lost.

Isn’t that like a lot of older television shows? Lots of shows are lost as no one wanted to pay for tape storage.

It’s important here to think about a few large issues with this data.

First, data storage. Other people in here are talking about decentralizing and creating fully redundant arrays so multiple copies are always online and can be easily migrated from one storage tech to the next. There’s a lot of work here, not just in getting all the data, but in making sure it keeps moving forward as we develop new technologies and new storage techniques. This won’t be a cheap endeavor, but it’s one we should try to keep up with. Hard drives die, bit rot happens. Even powered off, a spinning drive will eventually fail, as will an SSD. CDs I wrote 15+ years ago aren’t 100% readable.
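On the bit-rot point: the usual archival defense is a fixity check, recording a checksum for every file when it is stored and recomputing it periodically to catch silent corruption before the last good copy is gone. A minimal Python sketch, assuming the archive is a plain directory of files; `build_manifest` and `verify` are hypothetical helper names, not any particular tool’s API.

```python
from __future__ import annotations

import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large files don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict[str, str]:
    """Record a checksum for every file under `root`, keyed by relative path."""
    return {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify(root: Path, manifest: dict[str, str]) -> list[str]:
    """Re-hash every file in the manifest and report anything missing or changed."""
    problems: list[str] = []
    for rel, expected in manifest.items():
        path = root / rel
        if not path.is_file():
            problems.append(f"missing: {rel}")
        elif sha256_of(path) != expected:
            problems.append(f"corrupted: {rel}")
    return problems


# Demo: store two files, then simulate bit rot by flipping one bit in one of them.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "a.txt").write_bytes(b"hello archive")
    (root / "b.txt").write_bytes(b"some old web page")
    manifest = build_manifest(root)

    damaged = bytearray((root / "b.txt").read_bytes())
    damaged[0] ^= 0x01  # a single flipped bit
    (root / "b.txt").write_bytes(bytes(damaged))

    print(verify(root, manifest))  # prints ['corrupted: b.txt']
```

A checksum only tells you that a copy went bad; repairing it still needs a second, independent copy, which is why fixity checks go hand in hand with redundant storage.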

Second, there’s data organization. How can you find what you want later when all you have are images of systems, backups of databases, and static flat files of websites? A lot of sites now require JavaScript and other browser features to be viewed or used at all. You’ll just have a flat file with a bunch of rendered HTML; can you really still find the one you want? Search boxes won’t work, and API calls will fail without the real site up and running. Databases have to be restored before they can be queried, and if they’re relational, who will know how to connect those dots?

Third, formats. Sort of like the previous point, but what happens when JPG is deprecated in favor of something better? Can you open that file you wrote in 1985 today? Will there still be a program available to decode it? We’ll have to back those up as well… along with the OSes they run on. And if there are no processors left that can run them, we’ll need emulators. Obviously standards are great here; we may not forget how to read a PCX, GIF, or JPG file for a while, but more niche formats will definitely fall by the wayside.

Fourth, timescale. Can we keep this stuff for 50 years? 100 years? 1000 years? What happens when our thirty-times-great-grandchildren want to find this info? We regularly find things from a few thousand years ago at archaeological dig sites. There’s a difference between backing something up for use in a few months and for use in a few years; what about a few hundred or a few thousand? Data storage will be vastly different, as will processors, displays and such. … Or what happens in a Horizon Zero Dawn scenario, where all the secrets are locked up in a vault of technology left to rot that no one knows how to use because we’ve nuked ourselves into regression?

There is an experimental storage format that can store large amounts of data in a fused quartz disc. The data will not degrade with time since the bits are physically burned into the quartz.

This is fascinating, I wonder if it’ll take off eventually

Capitalism has no interest in preservation except where it is profitable. Thinking about the long-term future and about future archaeologists’ success, and acting on it, is not profitable.

It’s not just capitalism lol

Preserving things costs money/resources/time. This happens in a lot of societies.

And a non-capitalist society could decide to invest resources into preservation even if it’s not profitable.

So could a capitalist society?

Could it? Yeah, sure it could, and in some cases it will, but only if someone up the chain thinks it’s profitable. Profit motive should never dictate how archaeology is practiced.

Well, stone tablets, writing, songs, and culture can disappear with time, either naturally (such as erosion and weather) or through human action (such as burning books or the destructive investigation of ancient artifacts/ruins).

That’s why we try to keep good records.

A friend of mine talked about data preservation on the internet in a blog post, which I consider to be a good read. Sure, there’s a lot lost, but as he says in the blog post, that’s mostly gonna be trash content; the good stuff is generally comparatively well archived because people care about it.

That is likely true for a majority of “the good stuff”, but making that determination can be tricky. Let’s consider spam emails. In our daily lives they are useless, unwanted trash. However, it’s hard to know what a future historian might be able to glean from a complete record of all spam in the world over the span of a decade. They could analyze it for social trends, countries of origin, correlation with major global events, the creation and destruction of world governments. Sometimes the garbage of the day becomes a gold mine of source material that new conclusions can be drawn from many decades down the road.

I’m not proposing that we should preserve all that junk; it’s junk, without a doubt. But a person today can’t always tell what’s going to be valuable to society tomorrow.

I wonder if one of the things that tends to get filtered out in preservation is proportion.

When we deliberately save things, they tend to be either representative specimens or rarities chosen explicitly because they’re rare or “special”. So in the end, we’re left with a sample that no longer represents the original material.

Coin collections disproportionately contain rare dates. Weird and unsuccessful locomotives clutter railway museums. I expect that historians reading email archives in 2250 will see a far lower spam proportion than actually existed.
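To put rough numbers on the spam example (the figures below are made up purely for illustration): if 90% of the mail was spam, and archivists kept every legitimate message but only 1 spam in 100 as a “representative specimen”, the surviving archive would show only about 8% spam. The `archived_share` helper is a hypothetical name for that arithmetic.

```python
def archived_share(true_share: float, keep_target: float, keep_other: float) -> float:
    """Share of one category in an archive after selective retention.

    true_share:  fraction of the original material in the category (e.g. spam)
    keep_target: fraction of that category the archivists kept
    keep_other:  fraction of everything else they kept
    """
    kept_target = true_share * keep_target
    kept_other = (1 - true_share) * keep_other
    return kept_target / (kept_target + kept_other)


# Hypothetical: 90% spam originally, 1% of spam kept, all other mail kept.
print(round(archived_share(0.9, 0.01, 1.0), 3))  # prints 0.083
```

Unless the archive also records how much of each kind it threw away, a future historian has no way to undo that skew.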

Long ago the saying was “be careful - anything you post on the internet is forever”. Well, time has certainly proven that to be false.

There are things like /r/datahoarder (not sure if they have a new community here) that run their own petabyte-scale archiving projects, so some people are doing their part.

I think preservation is happening, the issue lies in accessibility. Projects like Archive.org are the public ones, but it is certain that private organizations are doing the same, just not making it public.

This is also my biggest worry about the Fediverse. It has tools to deal with it, but they are self-contained. As far as I’ve looked, no search engine is crawling the Fediverse, and there is currently no initiative to archive, index, and generally make Fediverse content accessible, which is a big risk. I’m sure we will soon see information lost for this reason, if it hasn’t happened already.

It’s still fairly new, I’m confident we’ll see fediverse crawlers before too long. Especially with all the attention it’s getting and more developers turning their interests here. I also saw some talk about instance mirroring that would allow backups should an instance go down. Things are in motion.

Absolutely a problem at the moment but I’m not too worried for the future tbh.

Oh yeah, my hopes are high, I already am quite fond of this new home. :)

Same! Howdy instance neighbor! 😄

During the Twitter exodus, my friend was fretting over not being able to access a beloved Twitter account’s tweets and wanting to save them somehow. I told her that if she printed them all on acid-free paper, she’d have a better chance of accessing them in the future than by trying to save them digitally.

sad and true

Optical discs are pretty good too. You can even buy special ceramic ones that shouldn’t degrade over centuries or millennia.

oh wow I have not heard of the ceramic ones but I do remember them having high hopes for the gold ones. now the problem is in the near future it might be harder to find machines that have cd drives

I’ve had some thoughts on, essentially, doing more of what historically worked: a mix of “archival quality materials” and “incentives for enthusiasts”. Accumulating data the way IA does is valuable, but if that’s all we focus on, we soak up a lot of spam in the process, and that creates some overwhelming costs.

The materials aspect generally means pushing for lower-fidelity, uncomplicated formats, but this runs up against what I call the “terrarium problem”: to preserve a precious rare flower exactly as is, you can’t just take a picture, you have to package up the entire jungle. Like, we have emulators for old computing platforms, and they work, but someone has to maintain them, and if you wanted to write something new for those platforms, you’d most likely be dealing with a “rest of the software ecosystem” that is decades out of date. So I believe part of the answer is encoding valuable information so that it stays meaningful without requiring the jungle - e.g. text that still reads fine outside of its original presentation. That tracks with humanity’s oldest stories and how some of their facts survived generations of retellings.

The incentives part is tricky. I am crypto- and NFT-adjacent, and use this identity to participate in that unabashedly. But my view on what it’s good for has shifted from the market framing towards examination of historical art markets, curation and communal memory. Having a story be retold is our primary way of preserving it - and putting information on-chain (like, actually on-chain; the state of the art in this can secure a few megabytes) creates a long-term incentive for the chain to “retell its stories” as a way of justifying its valuation. It’s the same reason museums are more than “boring old stuff”.

When you go to a museum you’re experiencing a combination of incentives: the circumstances that built the collection, the business behind exhibiting it to the public, and the careers of the staff and curators. A blockchain’s data is a huge collection - essentially a museum in the making, with the market element as a social construct that incentivizes preservation. So I believe archival is a thing blockchains could be very good at, given the right framing. If you like something and want it to stay around, that’s a medium that will be happy to take payment to do so.